The introduction of AEM as a Cloud Service marks a new era in the world of AEM development. This post provides a tutorial on integrating Cloud Manager, a new CI/CD tool from Adobe, with existing Jenkins-based approaches to code deployment.

The popularity of cloud infrastructures and serverless designs keeps growing. Adobe doesn't fall behind and proposes a myriad of cloud tools meant to revolutionize ways of working with AEM projects. The new solutions bring lots of changes to both developing and deploying the code. DevOps engineers face the challenging task of adapting their current deployment pipelines to the new, cloud-based approaches built around Adobe's Cloud Manager.

Introduction

First, let's delve deeper into the subject of code deployments and the way it's done with Cloud Manager.

Usual deployment pipelines

Deploying your code is all about making your software available to the end-users. However, as the project you're working on grows, you might need to perform multiple additional actions revolving around this process. This is where the concept of deployment pipelines comes into play - in most real-world scenarios, deploying software is much more than simply pushing your code to the production environment. Deployment pipelines aim to automate as much as possible - all additional tasks related to the deployment are incorporated into a pipeline. This process can be roughly divided into the deployment itself and pre- and post-deployment steps. When it comes to the actions you might want to perform, the possibilities are limitless. Some of the most commonly implemented pre-deployment steps are:

- static code analysis,

- data backups,

- running unit and integration tests,

- notifying all interested parties about the upcoming deployment.

Post-deployment steps are just as important and may include:

- clearing cache (CDN or other),

- running AET suites,

- running performance tests (e.g. SiteSpeed or Blazemeter),

- deploying the code to other services (e.g. FaaS or Docker),

- notifying users.

All these actions combined make for a clear and efficient deployment process. The cost of the initial setup of such a pipeline is relatively high, but after that, each deployment happens automatically.

Cloud Manager - what's that?

If you've already had a chance to work on a project using AEM as a Cloud Service or AEM hosted by AMS, you should already be familiar with Cloud Manager. It is meant to provide a simple, albeit versatile, CI/CD pipeline allowing AEM project teams to quickly deploy code to AEM hosted by Adobe in the cloud. The most important feature provided by CM is building and deploying the code, but it is much richer than that. The tool has lots of features designed to make the whole process as robust and fail-proof as possible. Apart from pushing the code to the final environment, it can handle:

- quality checks including code inspection, performance testing and security validation,

- scheduling deployments and triggering them based on events such as code commits,

- autoscaling by automatically detecting the need to increase the available resources.

As you can see, Adobe took the effort to develop a more or less self-standing solution covering some basic DevOps needs. However, it is a cookie-cutter solution, which, while taking some burden off DevOps engineers' shoulders, certainly doesn't check all the boxes. The possibilities of configuring CM are very limited in its current version. Worst of all, Cloud Manager is the only way of deploying the code if you use either AEM as a Cloud Service or AMS-hosted AEM. This means there's no way around it - you have to use Cloud Manager in such projects.

As a result, migrating your AEM project to Adobe's cloud solutions is even more troublesome - you might have pretty advanced deployment pipelines, which are now rendered useless. To overcome this difficulty and save the existing functionalities, you have to come up with a way of combining the two worlds - Cloud Manager and existing CI/CD tools we all know and love, such as Jenkins or Bamboo.

The solution presented in this post can also help you when creating a new project from scratch. By using the code presented, you can quickly set up your deployment process. Combined with no need to provision your AEM instances yourself, you can focus on implementing the business logic from the very beginning of the project.

The plan

We'll try to integrate CM with Jenkins - one of the most popular CI/CD tools in the world. The result we want to achieve is a Jenkins pipeline doing all the things an ordinary pipeline would do, but substituting the standard AEM deployment (e.g. done with the help of the GAP plugin) with the Cloud Manager implementation. This way, we only change a single piece of the whole puzzle and thus keep all the other pipeline steps intact. It's a win-win situation.

Let's move to the design of our approach. The most important part of the whole solution is communication between Jenkins and Cloud Manager. Fortunately, Adobe offers the AIO CLI tool, which is capable of performing any CM action you'd normally do in the browser directly from the command line interface. It's a perfect tool for automating the triggering of CM builds. It takes care of one-way (Jenkins to CM) communication, so we still need a way of informing Jenkins about CM events in order to display build status in the Jenkins interface. Luckily, CM has a system of events, which can be set up to either trigger an HTTP webhook or call an Adobe I/O Runtime action. The latter is especially enticing since it requires way less development, but we'll describe this in detail later.

To sum up, the approach we want to take is shown in the diagram below.

The solution comprises three main blocks - a Jenkins instance, Cloud Manager, and the Adobe I/O Runtime platform. As you can see, the communication is based on callbacks. Each block performs some actions and delegates processing to another element of the sequence when finished.

The processing starts with the Jenkins job. It performs the following steps:

- any pre-deployment actions,

- synchronizing development and Adobe's git repositories. This is necessary since CM fetches code from a specific repository set up by Adobe. Alternatively, you can push your code directly into Adobe's repository and omit this step altogether. The reason we prefer to keep two separate repositories is that it allows for more freedom. We might, for instance, host our repo on Bitbucket and reap all the benefits that come with it, such as a powerful web interface or Jira integration,

- triggering a CM build,

- exposing a unique public webhook and waiting for a call with build status details.

After finishing the build, Cloud Manager calls the I/O Runtime action, which is responsible for:

- calling the CM REST API to fetch more details about the execution (among other things, whether it was successful or not),

- letting Jenkins know about the final status of the deployment.

From the Jenkins standpoint, the only part different from a classic AEM deployment pipeline is the way the deployment itself takes place. Instead of deploying the code itself, Jenkins triggers an external process and waits asynchronously for it to finish. It's worth mentioning that it is also possible to poll CM for the final status, but setting up an event handler makes more sense. Asynchronous processing doesn't require as many resources, which in turn can be used to take care of other stuff in the meantime. However, polling might be necessary if you can't expose your Jenkins instance to the public. In this case, sending messages from the Runtime action to Jenkins is not possible, so the job has to repeatedly ask CM about the build status and fetch any additional details itself.
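To make the polling alternative more concrete, here is a minimal sketch of such a loop. It is written in JavaScript only for consistency with the Runtime action shown later in this post; in a real setup the same logic would live in a Jenkins pipeline step. The `pollUntilFinished` function, its options, and the list of in-progress status names are assumptions made for illustration, and `fetchStatus` stands for whatever call you use to ask CM about the execution status.

```javascript
// Assumed names of "still in progress" statuses; check them against the CM API.
const IN_PROGRESS_STATES = new Set(['NOT_STARTED', 'RUNNING', 'CANCELLING']);

const sleep = (ms) => new Promise((resolve) => setTimeout(resolve, ms));

// Hypothetical helper: repeatedly calls fetchStatus() until the execution
// leaves the in-progress states or the attempt limit is reached.
async function pollUntilFinished(fetchStatus, options = {}) {
  const { intervalMs = 30000, maxAttempts = 120 } = options;
  for (let attempt = 1; attempt <= maxAttempts; attempt += 1) {
    const status = await fetchStatus();
    if (!IN_PROGRESS_STATES.has(status)) {
      return status; // final status reached
    }
    if (attempt < maxAttempts) {
      await sleep(intervalMs); // wait before asking again
    }
  }
  throw new Error(`Execution still in progress after ${maxAttempts} attempts`);
}

module.exports = { pollUntilFinished };
```

The trade-off is visible in the loop itself: the caller stays busy (or at least blocked) for the whole duration of the build, which is exactly what the callback-based approach avoids.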

To make the solution as universal and maintenance-free as possible, we'd like to ship a Jenkins instance already provisioned for working with Cloud Manager. Docker seems like a perfect tool to use in this case. Containerizing Jenkins in such a way that it already has all the tools (AIO CLI, Jenkins plugins) preconfigured reduces the time and effort it takes to kick off a CM project.

Implementation

Let's leave the drawing board and start implementing the solution described above.

Jenkins

We'd like to have a Jenkins instance capable of communicating with CM. Additionally, it'd be nice if it came with a basic CM deployment pipeline preconfigured. To achieve this, we'll use Docker and the Jenkins Configuration as Code (a.k.a. JCasC) plugin. Docker will allow us to create a shippable image of a preconfigured Jenkins instance so that it can be simply pulled and built every time a new project starts. With the help of the JCasC plugin, we'll define all Jenkins configuration using code, without the need to define it manually using the web interface.

First of all, let's think of all the tools that our Jenkins instance would need. When it comes to the Jenkins plugins, let's prepare a plugins.txt file with all the plugins listed, along with their versions. This file will later be used to install them in the Docker container using the install-plugins.sh script. The plugins we need are:

    git:4.3.0
    job-dsl:1.77
    configuration-as-code:1.43
    workflow-aggregator:2.6
    webhook-step:1.4
    pipeline-utility-steps:2.6.1

The list is pretty straightforward: git will be needed to pull code and synchronize repositories, while job-dsl and configuration-as-code are used to define Jenkins configuration and jobs directly in code. Then, workflow-aggregator allows for defining pipelines and webhook-step makes it possible to have a webhook as an input step in a pipeline. Lastly, pipeline-utility-steps will be used to parse the JSON fetched from a CM event.

As for the tools required by the container Jenkins is going to run in, the list is:

- git - to synchronize Adobe's and development repositories,

- aio-cli - to trigger CM builds.

Assuming we have everything set up, we can think about the pipeline code. Due to its complexity, we'll extract the code responsible for synchronizing the repositories into a separate pipeline, which will later be used by the final deployment pipeline. Altogether, we end up with two pipelines: one that synchronizes the repositories and one that performs the deployment.

To implement the deployment pipeline, we're going to use the pipeline plugin and write the code in a mix of declarative and imperative styles. You can find the full code here. The pipeline consists of multiple steps:

- dummy pre-deployment step. This can be later changed in a real project to any arbitrary actions, such as sending notifications, performing tests etc.,

- repositories synchronization,

- triggering a CM build and waiting for a POST request from the Adobe I/O Runtime action. The execution ID is parsed based on the response from the AIO CLI and is later used to expose a unique webhook endpoint,

- post-deployment steps.

Now, let's think of the way we want to configure our Jenkins instance. Using the Jenkins Configuration as Code, it is all done in yaml files. Among standard things, we'll need to specify three sets of credentials - to the Jenkins instance itself and two sets of git repository credentials, one for the development repository and one for the Adobe one. We end up with the following jenkins.yml file:

    jenkins:
      authorizationStrategy:
        loggedInUsersCanDoAnything:
          allowAnonymousRead: false
      crumbIssuer:
        standard:
          excludeClientIPFromCrumb: false
      disableRememberMe: false
      mode: NORMAL
      numExecutors: 4
      primaryView:
        all:
          name: 'all'
      quietPeriod: 5
      scmCheckoutRetryCount: 0
      securityRealm:
        local:
          allowsSignup: false
          enableCaptcha: false
          users:
            - id: @JENKINS_USERNAME@
              password: @JENKINS_PASSWORD@
      slaveAgentPort: 50001
      views:
        - all:
            name: 'All'
    credentials:
      system:
        domainCredentials:
          - credentials:
              - usernamePassword:
                  scope: GLOBAL
                  id: dev-repo-credentials
                  username: @DEV_REPO_USERNAME@
                  password: @DEV_REPO_PASSWORD@
              - usernamePassword:
                  scope: GLOBAL
                  id: adobe-repo-credentials
                  username: @ADOBE_REPO_USERNAME@
                  password: @ADOBE_REPO_PASSWORD@

You should replace all the credential tokens (in the @TOKEN@ form) with real data. We need one more configuration file - jobs.yml. In this file, we'll store references to all the jobs we've created:

    jobs:
      - file: /usr/share/jenkins/ref/casc/jobs/job-prod-deploy.groovy
      - file: /usr/share/jenkins/ref/casc/jobs/job-stg-deploy.groovy
      - file: /usr/share/jenkins/ref/casc/jobs/job-sync-repos.groovy

Jenkins-wise, we now have everything ready and can proceed to the next steps.

Adobe I/O Runtime action

It's now time to develop our Adobe I/O Runtime action, which will handle incoming CM events and notify Jenkins about their status. We'll use Project Firefly to set up the execution environment we'll deploy our actions to. You can find the instructions for setting it up here.

When configuring the project, make sure to add the Cloud Manager API integration. It will allow you to perform CM actions, query for build data, and react to deployment events. To add the integration, go to your project in the Adobe I/O Console, click the Add to project button, select API from the drop-down menu, and find Cloud Manager. You can find the detailed instructions here.

After creating the project, let's use the Adobe I/O Extensible CLI to create scaffolding for our action. Let's execute the following command to create a new Adobe I/O app under the app directory:

    aio app init app

When prompted, choose the Firefly project you've created before. When asked for Adobe I/O features to include in the app, select "Actions". After some processing, you should end up with a neat project structure, ready to develop your custom action.

Firstly, we need to provide some parameters for our action. Find the .env file and add the following properties to it:

    # Jenkins endpoint to call
    JENKINS_WEBHOOK_URL=
    # Adobe authentication info
    ORGANIZATION_ID=
    TECHNICAL_ACCOUNT_EMAIL=
    CLIENT_ID=
    CLIENT_SECRET=
    META_SCOPE=
    PRIVATE_KEY=

The Jenkins URL should have the {JENKINS_URL}:{JENKINS_PORT}/webhook-step form. All authentication information can be extracted from the "Credentials" section in the Adobe Developer Console.

Next, let's add our action code. First, create a new directory for our action: actions/notify-jenkins. Let's put two files for our code there: index.js and helpers.js. We'll extract any auxiliary functions into the helpers.js file and keep the main one as concise as possible. Now, let's create a script responsible for parsing the CM event, fetching more build data from the CM REST API, and, lastly, calling the Jenkins webhook with the data extracted. We'll put the code in the index.js file:

    const axios = require('axios');
    const {
      fetchAccessToken,
      isEventPipelineFinished,
      extractExecutionStatus,
      getPipelineId,
      getExecutionId,
    } = require('./helpers');

    async function main(params) {
      const event = params.event;
      let dataToSend = undefined;
      // React only to events coming from a finished pipeline execution
      if (isEventPipelineFinished(event)) {
        // Authenticate and ask the CM REST API for the execution details
        const accessToken = await fetchAccessToken(params);
        dataToSend = await extractExecutionStatus(event, accessToken, params);
        // The IDs identify the unique webhook exposed by the Jenkins job
        const pipeline = getPipelineId(event);
        const execution = getExecutionId(event);
        await axios.post(`${params.JENKINS_WEBHOOK_URL}/${pipeline}-${execution}`, dataToSend);
      }
      return {
        statusCode: 200,
        body: dataToSend,
      };
    }

    exports.main = main;

As a first step, isEventPipelineFinished verifies that the event received actually comes from a finished pipeline build. Then, fetchAccessToken fetches an access token (via JWT authentication) and extractExecutionStatus calls the CM REST API to retrieve details about the execution. The next two calls, getPipelineId and getExecutionId, simply extract the build IDs necessary to construct the webhook URL. Lastly, axios.post sends the execution data to the webhook via a POST request. More implementation details can be found in the helpers.js file here.

The whole project structure is accessible here.

Before deploying the action, we have to modify the manifest.yml file. This file contains metadata about the action and stores all the inputs passed to the action as its parameters. The final manifest.yml configuration file looks as follows:

    packages:
      __APP_PACKAGE__:
        license: Apache-2.0
        actions:
          notify-jenkins:
            function: actions/notify-jenkins/index.js
            web: 'yes'
            runtime: 'nodejs:12'
            inputs:
              JENKINS_WEBHOOK_URL: $JENKINS_WEBHOOK_URL
              ORGANIZATION_ID: $ORGANIZATION_ID
              TECHNICAL_ACCOUNT_EMAIL: $TECHNICAL_ACCOUNT_EMAIL
              CLIENT_ID: $CLIENT_ID
              CLIENT_SECRET: $CLIENT_SECRET
              PRIVATE_KEY: $PRIVATE_KEY
              META_SCOPE: $META_SCOPE

In the inputs section, we copy the parameters from the .env file.
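The inputs declared in the manifest reach the action as properties of the `params` object passed to `main`. Because a forgotten .env entry is easy to miss, it may be worth failing fast when something is absent. The snippet below is a small sketch of such a check; `findMissingParams` is a hypothetical helper and not part of the original project.

```javascript
// Names of the inputs declared in manifest.yml.
const REQUIRED_PARAMS = [
  'JENKINS_WEBHOOK_URL',
  'ORGANIZATION_ID',
  'TECHNICAL_ACCOUNT_EMAIL',
  'CLIENT_ID',
  'CLIENT_SECRET',
  'PRIVATE_KEY',
  'META_SCOPE',
];

// Hypothetical helper: returns the names of inputs that are absent or empty.
function findMissingParams(params, required = REQUIRED_PARAMS) {
  return required.filter(
    (name) => params[name] === undefined || params[name] === null || params[name] === ''
  );
}

module.exports = { findMissingParams, REQUIRED_PARAMS };
```

Inside `main`, one could call `findMissingParams(params)` first and return an error response listing the missing names instead of attempting to authenticate with incomplete data.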

Finally, the last thing remaining is deploying the action by calling:

    aio app deploy

The action should now be available on the Adobe I/O Runtime platform.

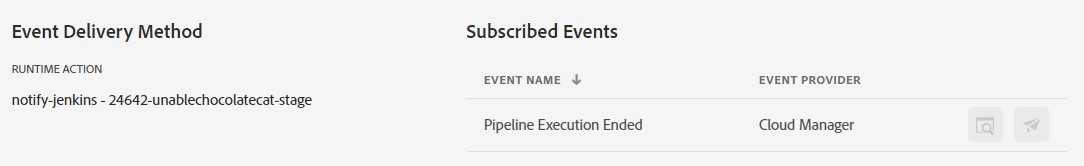

Cloud Manager

After deploying the action, it's time to configure an event handler in the Cloud Manager web interface. The process of setting up an event handler is described in the documentation. In the end, it is as simple as choosing a "Pipeline Execution Ended" event and selecting the action we've deployed before.

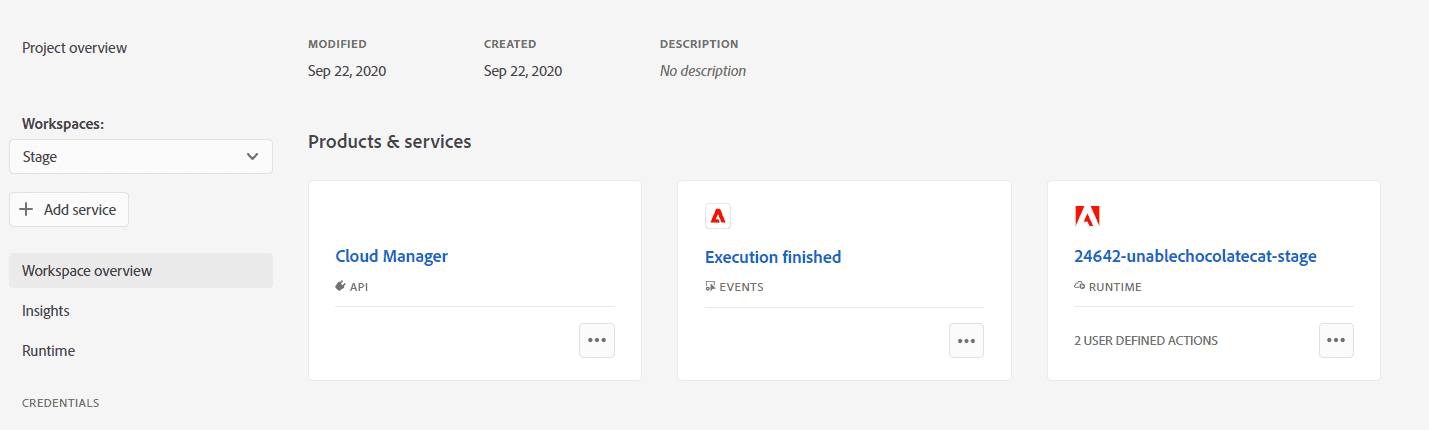

In the end, your project's detail page should look somewhat like this, with the CM API integration added, Runtime action deployed, and an event handler specified:

It's worth pointing out why the approach of using a Runtime action as an event handler is superior to setting up your own HTTP webhook. Adobe requires the event handler to accept two different types of requests:

- GET request with a challenge query string parameter (the request must return the parameter value as a response),

- POST request with event details (the endpoint has to respond with a 200 status code).

Additionally, in order for the webhook to be secure, signature validation has to be performed upon receiving a POST request. Every request coming from Cloud Manager is signed using a SHA256 HMAC stored in the x-adobe-signature header. This means that your webhook event handler must contain extra logic just to make your endpoint secure and compliant with Adobe's requirements (you can find the signature validation code here).
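To give an idea of how much extra logic a hand-rolled webhook would need, here is a minimal, framework-agnostic sketch covering both request types. It assumes that the signature is a base64-encoded HMAC-SHA256 of the raw request body keyed with the integration's client secret; the function names and the shape of the `request` object are hypothetical, so treat this as an illustration rather than a reference implementation.

```javascript
const crypto = require('crypto');

// Assumption: the signature is a base64-encoded HMAC-SHA256 of the raw body,
// keyed with the client secret of the Adobe I/O integration.
function isSignatureValid(rawBody, signatureHeader, clientSecret) {
  const expected = crypto
    .createHmac('sha256', clientSecret)
    .update(rawBody)
    .digest('base64');
  const expectedBuffer = Buffer.from(expected);
  const receivedBuffer = Buffer.from(String(signatureHeader || ''));
  // timingSafeEqual throws on length mismatch, so compare lengths first.
  return expectedBuffer.length === receivedBuffer.length
    && crypto.timingSafeEqual(expectedBuffer, receivedBuffer);
}

// Hypothetical handler. `request` is assumed to look like
// { method, query, headers, rawBody } with lower-cased header names.
function handleWebhookRequest(request, clientSecret) {
  if (request.method === 'GET') {
    // Echo the challenge parameter back to the caller.
    return { statusCode: 200, body: request.query.challenge };
  }
  if (request.method === 'POST') {
    const signature = request.headers['x-adobe-signature'];
    if (!isSignatureValid(request.rawBody, signature, clientSecret)) {
      return { statusCode: 401, body: 'Invalid signature' };
    }
    // The event would be processed here (e.g. by notifying Jenkins).
    return { statusCode: 200, body: 'OK' };
  }
  return { statusCode: 405, body: 'Method not allowed' };
}

module.exports = { isSignatureValid, handleWebhookRequest };
```

Note that the signature has to be computed over the raw request body, before any JSON parsing, which is one more detail a custom endpoint needs to get right.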

Fortunately, by using a Runtime action as an event handler, you are freed from this burden. All the boilerplate stuff is delegated to a dedicated action provided out of the box by Adobe. This action is the one being invoked directly by the Cloud Manager. It has all the necessary endpoints already set up and takes care of the signature validation for you before passing the event further to your custom action.

Your custom action is called based on triggers and rules, which are configured automatically when you select your action as an event handler in the Adobe Developer Console (if you want to know more about OpenWhisk actions, triggers, and rules, check out the documentation). In our example, the rule states that the notify-jenkins action is to be called in response to a cloudmanager_pipeline_execution_end trigger. The OpenWhisk model is presented in the diagram below.

The entry action, as well as the mechanism of triggers and rules, is provided by Adobe, so you can focus solely on developing the business logic of your custom action.

Having saved everything on the Adobe Console side, we can now proceed to tying all the pieces together.

Docker

So far, we have created Jenkins jobs and deployed an I/O Runtime action. It's now time to make the whole solution shippable by creating a Docker image. Inside the Dockerfile, we want to install and configure the AIO CLI, download all the necessary Jenkins plugins, and copy the Jenkins configuration. Putting it all together, we end up with the following Dockerfile:

    FROM jenkins/jenkins:2.235.5

    # Set up some variables
    # Skip jenkins initial wizard
    ENV JAVA_OPTS -Djenkins.install.runSetupWizard=false
    # Directories for storing various files needed by Jenkins
    ENV JENKINS_REF /usr/share/jenkins/ref
    ENV CASC_JENKINS_CONFIG ${JENKINS_REF}/casc
    # Jenkins URL, needed by the webhook step plugin
    ENV JENKINS_URL http://localhost:8080
    # Adobe IO program ID used by AIO CLI
    ENV AIO_PROGRAM_ID 0000

    # Install node + AIO CLI
    USER root
    RUN apt-get update -y && apt-get upgrade -y
    RUN curl -sL https://deb.nodesource.com/setup_10.x | bash
    RUN apt-get install -y nodejs
    RUN npm install -g @adobe/aio-cli
    RUN aio plugins:install @adobe/aio-cli-plugin-cloudmanager

    # Set up AIO authentication
    COPY ./data/config.json ${JENKINS_REF}/aio/config.json
    COPY ./data/private.key ${JENKINS_REF}/aio/private.key
    RUN aio config:set jwt-auth ${JENKINS_REF}/aio/config.json --file --json
    RUN aio config:set jwt-auth.jwt_private_key ${JENKINS_REF}/aio/private.key --file
    RUN aio config:set cloudmanager_programid ${AIO_PROGRAM_ID}

    # Copy Jenkins configuration
    COPY ./config/*.yml ${CASC_JENKINS_CONFIG}/
    COPY ./config/jobs/* ${CASC_JENKINS_CONFIG}/jobs/
    COPY ./config/pipelines/* ${CASC_JENKINS_CONFIG}/pipelines/

    # Install Jenkins plugins
    COPY ./config/plugins.txt ${JENKINS_REF}/plugins.txt
    RUN /usr/local/bin/install-plugins.sh < ${JENKINS_REF}/plugins.txt

For configuration purposes, we'll copy some files into our container: config.json and private.key. These files store credentials extracted from the Adobe Developer Console and are needed for setting up AIO CLI authentication (the process is described here). Additionally, all Jenkins configuration has to be copied - this includes the jobs and pipelines code as well as the plugins.txt file containing references to all the plugins required by our solution. Change the references to these files in the Dockerfile above if you have a different file structure.

Now, let's build the image and run the container using the following commands:

docker build --tag cm-jenkins

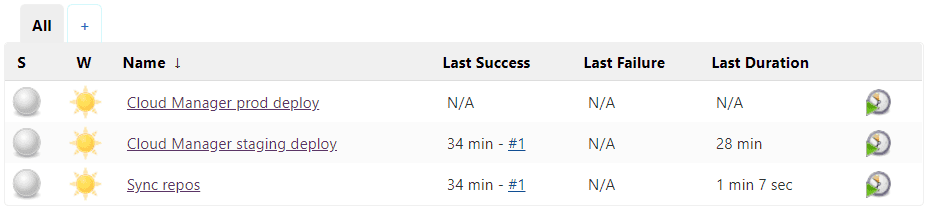

docker run --publish 8000:8080 --detach cm-jenkinsOnce everything is finished, a brand-new Jenkins instance should be available at http://localhost:8080. You should see three jobs already set up for you.

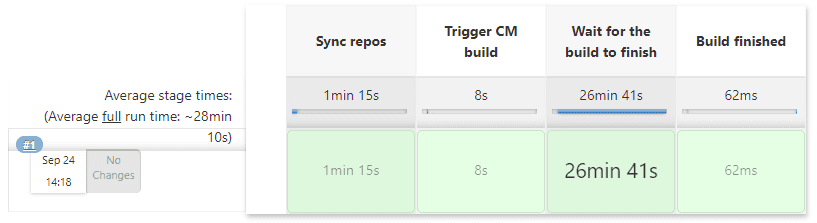

Let's log in and verify one of the pipelines by running it.

As you can see, everything went smoothly. You can look at the logs and see that the repositories were synchronized, the build was triggered, and an event received.

Voilà! You are now free to extend the boilerplate pipelines by adding any additional pre- and post-deployment steps you wish.

Summary

In this tutorial, we went through the process of integrating Cloud Manager, a novel CI/CD solution, with an existing tool - Jenkins. Combining these two solutions allows you to use the newest technologies provided by Adobe without abandoning your preexisting processes. It's a foregone conclusion that AEM as a Cloud Service is only going to gain traction in the upcoming years. It is thus extremely important to get to know the cloud solutions and integrate them into your company's solutions if you don't want to fall behind. Soon, Cloud Manager might become the only option - and you should come prepared.