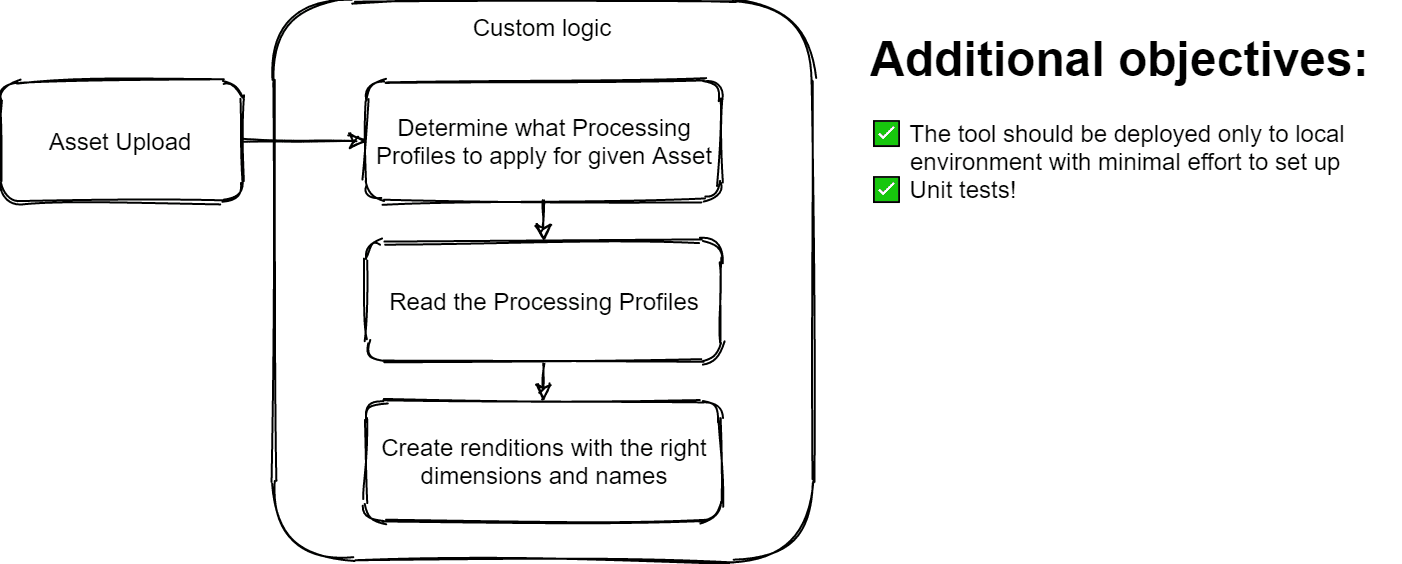

How to recreate the process of handling renditions in AEM as a Cloud Service in local development using workflows.

Renditions in AEM as a Cloud Service

AEM as a Cloud Service introduces a different approach to handling Assets. The Assets are no longer stored within AEM itself. Instead, they are stored in cloud binary storage. As for Asset processing, the work is delegated to cloud-native Asset microservices.

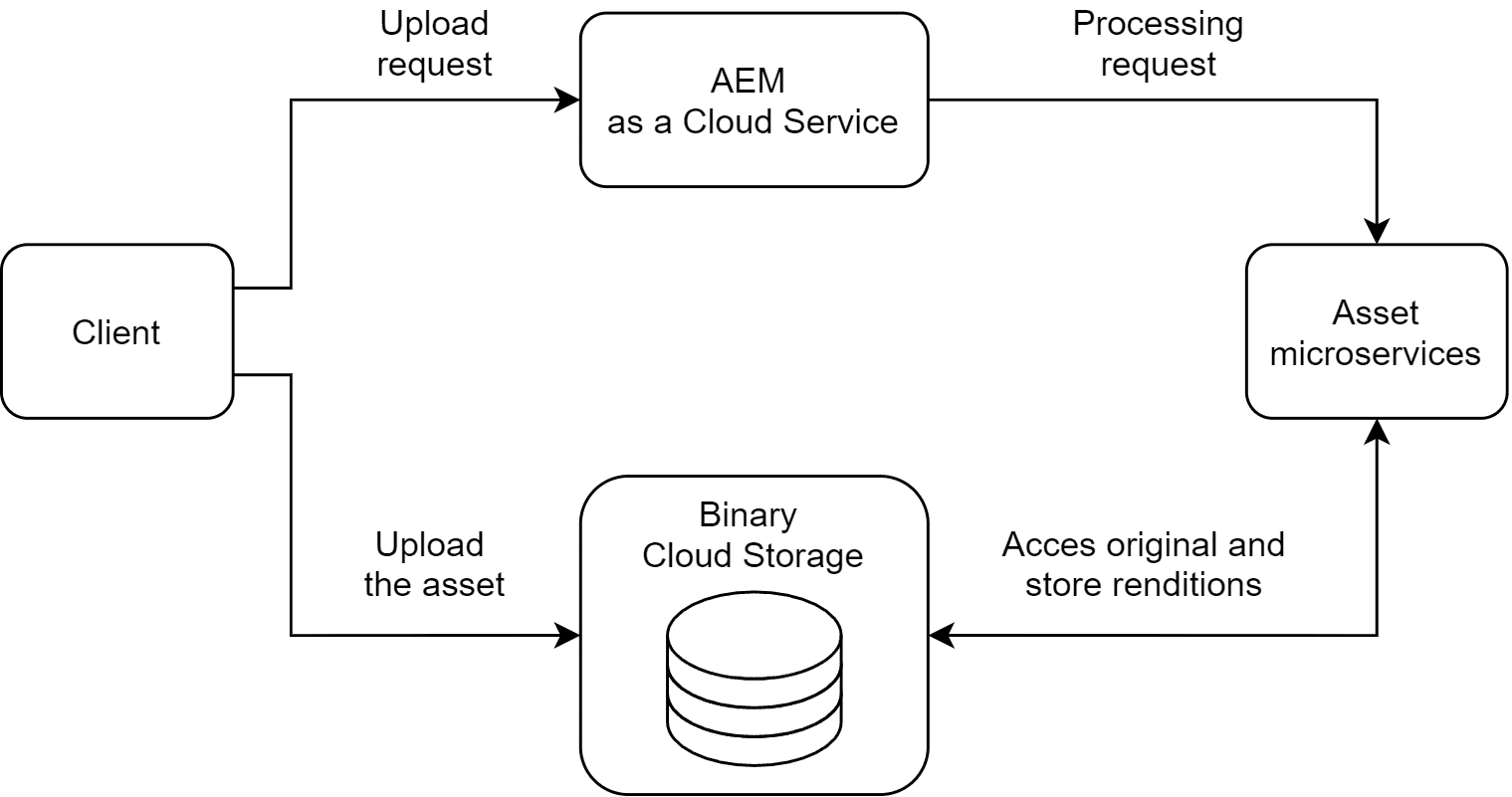

The diagram shows a simplified version of the one available in the official Adobe documentation.

As seen in the diagram, the processing starts with the Client requesting the Asset upload. The actual upload is performed directly to the Binary Cloud Storage. Upon completion of the upload, AEM requests the Asset microservices to process the renditions. The Asset microservices then obtain the original Asset binary from Binary Cloud Storage, perform the requested actions on the image, and finally store the renditions back in Binary Cloud Storage.

Local Development

AEM as a Cloud Service SDK

Local development is supported by the AEM as a Cloud Service SDK. The process of setting up the runtime locally is quite simple and well documented.

However...

The AEM as a Cloud Service SDK is not a 1:1 copy of the actual runtime that's running in the cloud.

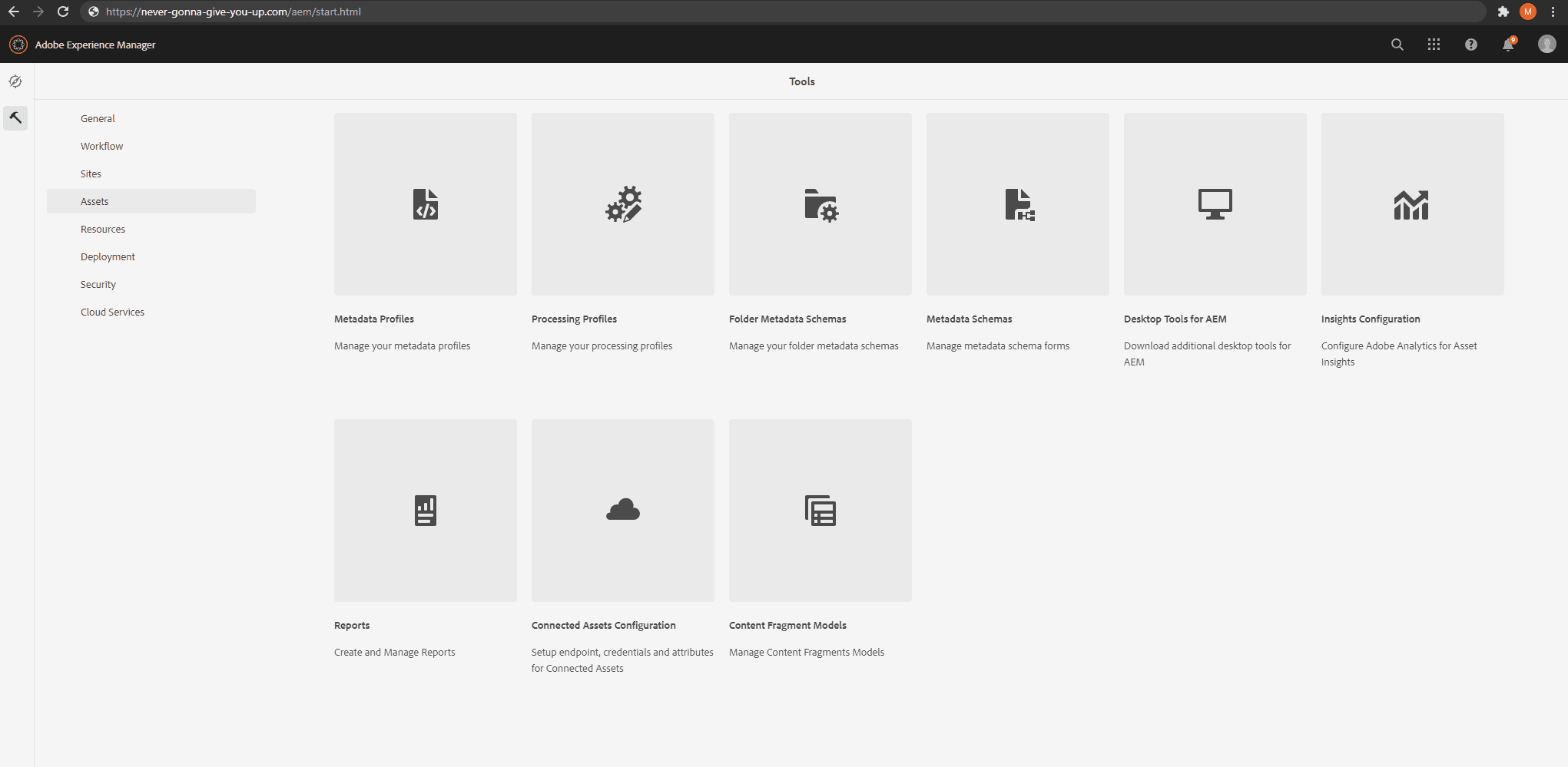

You can probably already guess where this is going... You will encounter numerous differences in how the local development behaves, compared to the Cloud. Let's quickly jump into Tools ➡ Assets to create a Processing Profile to define some renditions to be generated.

Let's take a look at the Cloud environment first.

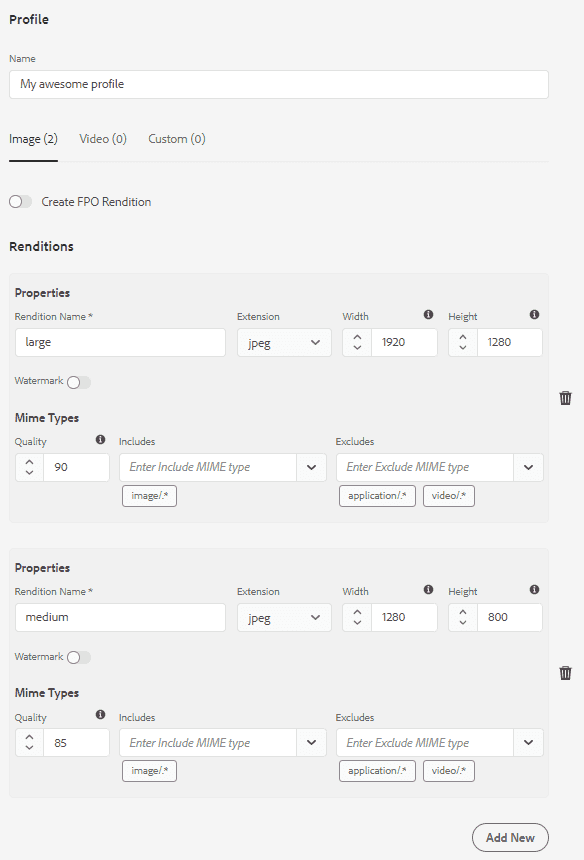

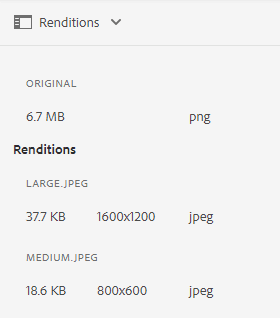

We'll be creating a Processing Profile with two renditions: large and medium.

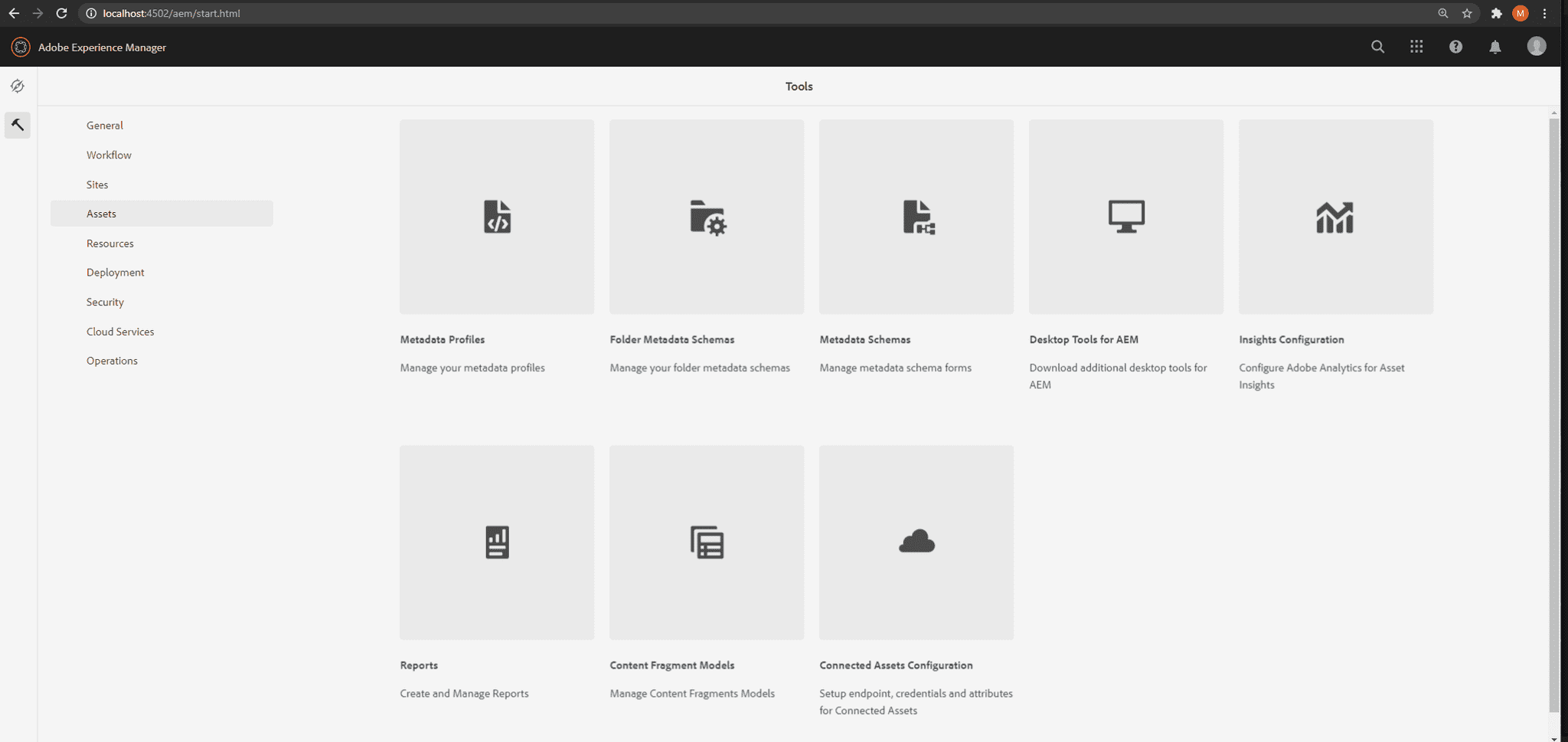

And then, on the local environment.

Whoopsie! There is no Processing Profiles tile on the local environment!

In fact, the whole fancy Cloud/microservices process is absent here.

But we want to have renditions and the same experience of uploading an Asset to AEM locally, right?

What can't be done?

Ideally, we would want to recreate the whole process locally. That would require creating some form of Binary Cloud Storage for storing the Assets for each developer and their local development environment. Then, we would have to implement a simple microservice that would process Assets into renditions. Finally, we would have to reverse-engineer a solution that would exactly mimic the communication between our Binary Cloud Storage, local AEM instance, and our Asset microservices.

Sounds painful, right?

Let's review what we actually want to see on the local development side.

Upon uploading an Asset, we want to have exactly the same renditions as in the Cloud. We don't want to (and are not able to) exactly mirror the behavior of communication between Adobe's Cloud entities.

Let's make some renditions then!

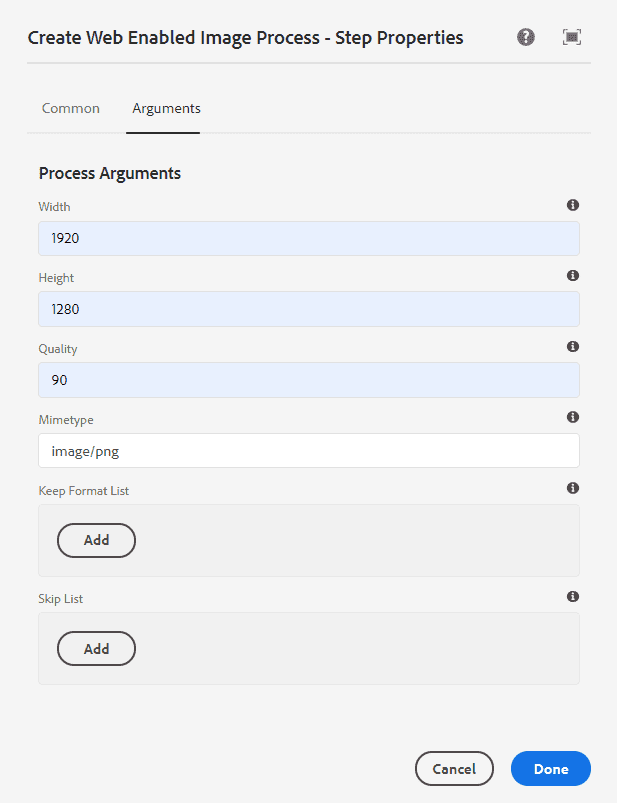

The first thing that comes to mind is to add a Create Web Enabled Image Process step to the DAM Update Asset workflow.

We can specify the dimensions, MIME types, and quality, just as in Processing Profiles. Looks like we have everything in place. Neat!

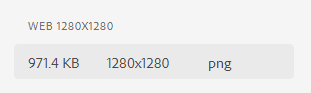

Let's sync the workflow, upload some image, and see what the renditions are.

The file is named cq5dam.web.1280.1280.png

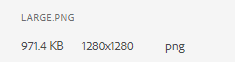

Now, let's compare it to the same rendition created in Cloud.

The actual file is named large.png

Not perfect, right? The name of the rendition is different. Indeed, in Processing Profiles, the name of the rendition can be specified, whereas Create Web Enabled Image Process will save the rendition in cq5dam.web.<width>.<height>.<extension> format. What's more, Processing Profiles can be assigned to a specific folder, whereas the workflow will be triggered for all Assets, regardless of which folder they're uploaded into.
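To make the naming difference concrete, here is a minimal plain-Java sketch. It only illustrates the two naming schemes described above; the class and method names are hypothetical and are not part of any AEM API.

```java
/** Illustrative only: contrasts the two rendition naming schemes. */
final class RenditionNames {

    /** Name pattern used by the Create Web Enabled Image Process step. */
    static String webEnabledName(int width, int height, String extension) {
        return "cq5dam.web." + width + "." + height + "." + extension;
    }

    /** Hypothetical helper: a Processing Profile rendition keeps the configured name. */
    static String processingProfileName(String configuredName, String extension) {
        return configuredName + "." + extension;
    }

    public static void main(String[] args) {
        System.out.println(webEnabledName(1280, 1280, "png"));      // cq5dam.web.1280.1280.png
        System.out.println(processingProfileName("large", "png"));  // large.png
    }
}
```

Any code that looks up a rendition by name will therefore behave differently locally and in the Cloud unless both environments produce the same names.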

Looks like we have to develop some solution ourselves.

To the drawing board!

Gradle build

First things first. Let's set up the build.

We'll be using Gradle, or more specifically the Gradle AEM Plugin (GAP for short), because it's extremely easy to set up and to deploy the package to your local environment. Note, however, that the AEM Project Archetype, which is Adobe's recommended template for Experience Cloud projects, uses Maven. To prevent this module from being deployed to Cloud environments, use Maven profiles, as described in this documentation.

We'll set up a simple build script using Kotlin DSL.

Content of build.gradle.kts

    plugins {
        val gradleAemPluginVersion = "14.2.8"
        id("com.cognifide.aem.instance.local") version gradleAemPluginVersion
        id("com.cognifide.aem.bundle") version gradleAemPluginVersion
        id("com.cognifide.aem.package") version gradleAemPluginVersion
    }

    group = "com.mysite"

    repositories {
        jcenter()
        mavenCentral()
        maven("https://repo.adobe.com/nexus/content/groups/public")
    }

    java {
        // JDK 11, nice! 😎
        sourceCompatibility = JavaVersion.VERSION_11
        targetCompatibility = JavaVersion.VERSION_11
    }

    tasks {
        test {
            failFast = true
            useJUnitPlatform()
            testLogging.showStandardStreams = true
        }
    }

    dependencies {
        // try to use the latest version!
        implementation("com.adobe.aem:aem-sdk-api:2020.6.3766.20200619T110731Z-200604")

        // we'll be using Lombok
        compileOnly("org.projectlombok:lombok:1.18.12")
        annotationProcessor("org.projectlombok:lombok:1.18.12")
        testCompileOnly("org.projectlombok:lombok:1.18.12")
        testAnnotationProcessor("org.projectlombok:lombok:1.18.12")

        testImplementation("org.junit.jupiter:junit-jupiter-api:5.6.2")
        testRuntimeOnly("org.junit.jupiter:junit-jupiter-engine:5.6.2")
        testImplementation("org.mockito:mockito-core:2.25.1")
        testImplementation("org.mockito:mockito-junit-jupiter:2.25.1")
        testImplementation("junit-addons:junit-addons:1.4")
        testImplementation("io.wcm:io.wcm.testing.aem-mock.junit5:2.5.2")
        testImplementation("uk.org.lidalia:slf4j-test:1.0.1")
    }

This is a VERY minimal configuration for a project using Gradle AEM Plugin. If you want to have a peek at its full power, see this article, or if you want to see how it can be used with AEM Project Archetype, see this article.

By using Gradle AEM Plugin, we'll be able to compile our code, package it into a bundle, and then build a package that has the bundle embedded along with any JCR content nodes we develop. The package can then be automatically uploaded to our localhost:4502 AEM instance!

Workflow Process Step

Now that we're all set up, let's investigate our options for hooking into the Asset upload event locally.

The first and best guess is the DAM Update Asset workflow. Since this workflow is, by default, executed upon each Asset upload, we can add our custom code to it in the form of a Custom Process Step included in the workflow pipeline.

Let's start with creating a WorkflowProcess implementation (take care to import this interface from the right package!).

Content of src/main/java/com/mysite/local/tools/workflow/LocalRenditionMakerProcess.java

    package com.mysite.local.tools.workflow;

    import com.adobe.granite.workflow.WorkflowSession;
    import com.adobe.granite.workflow.exec.WorkItem;
    import com.adobe.granite.workflow.exec.WorkflowProcess;
    import com.adobe.granite.workflow.metadata.MetaDataMap;
    import lombok.extern.slf4j.Slf4j;
    import org.osgi.framework.Constants;
    import org.osgi.service.component.annotations.Component;

    /**
     * Create renditions as AEM as a Cloud Asset microservices would create.
     */
    @Slf4j
    @Component(property = {
            Constants.SERVICE_DESCRIPTION + "=Generate renditions as in Cloud",
            Constants.SERVICE_VENDOR + "=Cognifide",
            "process.label" + "=Generate Cloud renditions"
    })
    public class LocalRenditionMakerProcess implements WorkflowProcess {

        @Override
        public void execute(WorkItem workItem, WorkflowSession workflowSession, MetaDataMap metaDataMap) {
            // TODO: Implementation!
        }
    }

The first thing we need to do is to determine which Asset we're dealing with here. The path to the Asset is embedded in the workItem object.

Let's create a static util method that will retrieve this value:

Content of src/main/java/com/mysite/local/tools/workflow/WorkflowUtil.java

    static String getAssetPath(WorkItem workItem) {
        return Optional.ofNullable(workItem.getWorkflowData().getPayload())
                .filter(String.class::isInstance)
                .map(String.class::cast)
                // there are cases when the path points to /jcr:content/renditions/original
                .map(path -> StringUtils.substringBefore(path, "/jcr:content"))
                .orElse(StringUtils.EMPTY);
    }

Quite self-explanatory. We just fetch the String payload and trim it when needed.
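The trimming rule can also be tried out without any AEM or Commons Lang classes. The sketch below is a plain-JDK stand-in written for illustration; the class name PayloadPaths and its methods are hypothetical, and the payload is passed in directly instead of being read from a WorkItem.

```java
import java.util.Optional;

/** Plain-JDK stand-in for the payload handling; not the AEM-based utility itself. */
final class PayloadPaths {

    private static final String JCR_CONTENT = "/jcr:content";

    /** Returns the asset path for a String payload, or an empty string otherwise. */
    static String assetPath(Object payload) {
        return Optional.ofNullable(payload)
                .filter(String.class::isInstance)
                .map(String.class::cast)
                .map(PayloadPaths::beforeJcrContent)
                .orElse("");
    }

    /** Cuts everything from the first "/jcr:content" on; leaves other paths untouched. */
    private static String beforeJcrContent(String path) {
        int index = path.indexOf(JCR_CONTENT);
        return index < 0 ? path : path.substring(0, index);
    }

    public static void main(String[] args) {
        System.out.println(assetPath("/content/dam/a/43.png/jcr:content/renditions/original"));
        System.out.println(assetPath("/content/dam/someAsset.with.dots.png"));
        System.out.println(assetPath(null).isEmpty());
    }
}
```

Non-String payloads are treated the same way as a missing payload, which keeps the caller down to a single "is the path blank?" check.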

We can set up a test for this method right away!

Content of src/test/java/com/mysite/local/tools/workflow/WorkflowUtilTest.java

    private WorkItem workItem;
    private WorkflowData workflowData;

    @BeforeEach
    void setUp() {
        workItem = mock(WorkItem.class);
        workflowData = mock(WorkflowData.class);
        when(workItem.getWorkflowData()).thenReturn(workflowData);
    }

    @Test
    @DisplayName("Given valid path to Asset, When getAssetPath, Then return valid asset path")
    void testGetAssetPathWithValidPath() {
        when(workflowData.getPayload()).thenReturn("/content/dam/someAsset.with.dots.png");
        String actual = WorkflowUtil.getAssetPath(workItem);
        assertEquals("/content/dam/someAsset.with.dots.png", actual);
    }

    @Test
    @DisplayName("Given null as path to Asset, When getAssetPath, Then return empty string")
    void testGetAssetPathWithNull() {
        when(workflowData.getPayload()).thenReturn(null);
        String actual = WorkflowUtil.getAssetPath(workItem);
        assertEquals(StringUtils.EMPTY, actual);
    }

    @Test
    @DisplayName("Given path to Asset original rendition, When getAssetPath, Then return valid asset path")
    void testGetAssetPathWithOriginalRenditionPath() {
        when(workflowData.getPayload()).thenReturn("/content/dam/test/test2/test3/43.png/jcr:content/renditions/original");
        String actual = WorkflowUtil.getAssetPath(workItem);
        assertEquals("/content/dam/test/test2/test3/43.png", actual);
    }

Obtaining Processing Profiles

Now that we're aware of which Asset we'll be dealing with here, we need to obtain a set of Processing Profiles to apply for this Asset.

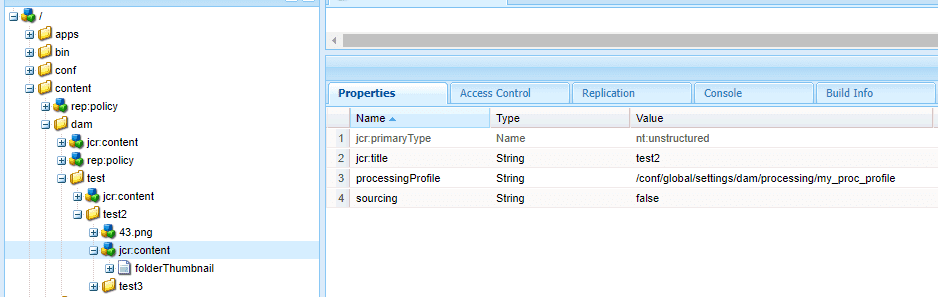

The information about which Processing Profile is applied to a given DAM folder is stored in the processingProfile property of the folder's jcr:content node. It's worth noting that only one Processing Profile can be applied to a folder. However, an Asset is also affected by the Processing Profiles set on any of its ancestor folders. The upper limit is the /content/dam folder.

After obtaining the Asset resource, we can traverse up in the DAM node tree and fetch all the Processing Profiles to a set of paths.
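Before looking at the Sling-based code, the traversal itself can be sketched on plain path strings. This is only an illustration under stated assumptions: the map of folder paths to profile paths is a hypothetical stand-in for the processingProfile property on each folder's jcr:content node, and the class name is made up.

```java
import java.util.LinkedHashSet;
import java.util.Map;
import java.util.Set;

/** Illustrative traversal over path strings instead of Sling resources. */
final class ProfileLookupSketch {

    private static final String DAM_ROOT = "/content/dam";

    /** Collects the profiles of all ancestor folders, up to and including /content/dam. */
    static Set<String> profilesFor(String assetPath, Map<String, String> folderProfiles) {
        Set<String> profiles = new LinkedHashSet<>();
        String current = assetPath;
        // keep stepping to the parent while we are still strictly below /content/dam
        while (current.startsWith(DAM_ROOT + "/")) {
            current = current.substring(0, current.lastIndexOf('/'));
            String profile = folderProfiles.get(current);
            if (profile != null) {
                profiles.add(profile);
            }
        }
        return profiles;
    }

    public static void main(String[] args) {
        Map<String, String> folderProfiles = Map.of(
                "/content", "/conf/global/settings/dam/processing/this-should-not-be-reached",
                "/content/dam", "/conf/global/settings/dam/processing/profile-from-repo2",
                "/content/dam/test", "/conf/global/settings/dam/processing/profile-from-repo");
        System.out.println(profilesFor("/content/dam/test/test2/test3/43.png", folderProfiles));
    }
}
```

The loop stops as soon as the current path is /content/dam itself, so a profile set on /content is never picked up.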

Content of src/main/java/com/mysite/local/tools/workflow/ProcessingProfilesUtil.java

    static Set<String> getProcessingProfilePathsToApply(Resource assetResource) {
        return (new DamNodesIterator(assetResource)).toStream()
                .map(optResource ->
                        optResource.map(resource -> resource.getChild(JcrConstants.JCR_CONTENT))
                                .map(Resource::getValueMap)
                                .map(jcrContentValueMap -> jcrContentValueMap.get("processingProfile"))
                                .filter(String.class::isInstance)
                                .map(String.class::cast)
                )
                .flatMap(Optional::stream)
                .collect(Collectors.toSet());
    }

    // Walks up from the given resource through its ancestors; /content/dam is the last one returned.
    private static class DamNodesIterator implements Iterator<Optional<Resource>> {

        private Optional<Resource> optResource;

        DamNodesIterator(Resource resource) {
            optResource = Optional.of(resource);
        }

        @Override
        public boolean hasNext() {
            // a parent is still worth visiting only while we are strictly below /content/dam
            return optResource.map(Resource::getPath)
                    .map(path -> StringUtils.startsWith(path, "/content/dam/"))
                    .orElse(false);
        }

        @Override
        public Optional<Resource> next() {
            optResource = optResource.map(Resource::getParent);
            return optResource;
        }

        Stream<Optional<Resource>> toStream() {
            return StreamSupport.stream(
                    Spliterators.spliteratorUnknownSize(this, Spliterator.ORDERED),
                    false
            );
        }
    }

Now, let's test this fine piece of code.

We'll be using AEM Mocks to mock a JCR content tree. The mock has the following structure and will be loaded under the /content node.

Content of src/test/resources/contentSamples/dam.json

    {
      "jcr:content": {
        "jcr:primaryType": "nt:unstructured",
        "processingProfile": "/conf/global/settings/dam/processing/this-should-not-be-reached"
      },
      "dam": {
        "jcr:primaryType": "sling:Folder",
        "jcr:content": {
          "jcr:primaryType": "nt:unstructured",
          "jcr:title": "Assets",
          "processingProfile": "/conf/global/settings/dam/processing/profile-from-repo2"
        },
        "test": {
          "jcr:primaryType": "sling:Folder",
          "test2": {
            "jcr:primaryType": "sling:Folder",
            "jcr:content": {
              "jcr:primaryType": "nt:unstructured",
              "jcr:title": "test2"
            },
            "test3": {
              "jcr:primaryType": "sling:Folder",
              "jcr:content": {
                "jcr:primaryType": "nt:unstructured",
                "jcr:title": "test3"
              },
              "43.png": {
                "jcr:primaryType": "dam:Asset"
              }
            }
          },
          "jcr:content": {
            "jcr:primaryType": "nt:unstructured",
            "jcr:title": "test",
            "processingProfile": "/conf/global/settings/dam/processing/profile-from-repo"
          }
        },
        "test-evil": {
          "jcr:primaryType": "sling:Folder",
          "test2": {
            "jcr:primaryType": "sling:Folder",
            "jcr:content": {
              "jcr:primaryType": "nt:unstructured",
              "jcr:title": "test2",
              "processingProfile": "/conf/global/settings/dam/processing/im-evil"
            },
            "test3": {
              "jcr:primaryType": "sling:Folder",
              "jcr:content": {
                "jcr:primaryType": "nt:unstructured",
                "jcr:title": "test3"
              }
            }
          },
          "jcr:content": {
            "jcr:primaryType": "nt:unstructured",
            "jcr:title": "test",
            "sourcing": "false",
            "processingProfile": "/conf/global/settings/dam/processing/im-evil2"
          }
        }
      }
    }

We'll be hitting the 43.png Asset. The only Processing Profiles fetched by our code should be /conf/global/settings/dam/processing/profile-from-repo and /conf/global/settings/dam/processing/profile-from-repo2.

Now, let's write the test.

Content of src/test/java/com/mysite/local/tools/workflow/ProcessingProfilesUtilTest.java

    @TestInstance(value = Lifecycle.PER_CLASS)
    @ExtendWith(AemContextExtension.class)
    class ProcessingProfilesUtilTest {

        private final AemContext context = new AemContext();

        @BeforeAll
        void setUp() {
            context.load().json("/contentSamples/dam.json", "/content");
        }

        @Test
        @DisplayName("Given valid DAM tree, When getProcessingProfilesToApply, Then return valid processingProfile paths")
        void testGetProcessingProfilePathsToApply() {
            Resource resource = context.resourceResolver()
                    .getResource("/content/dam/test/test2/test3/43.png");
            Set<String> actual = ProcessingProfilesUtil.getProcessingProfilePathsToApply(resource);
            assertEquals(Set.of("/conf/global/settings/dam/processing/profile-from-repo",
                    "/conf/global/settings/dam/processing/profile-from-repo2"), actual);
        }
    }

Obtaining Processing Profiles configuration

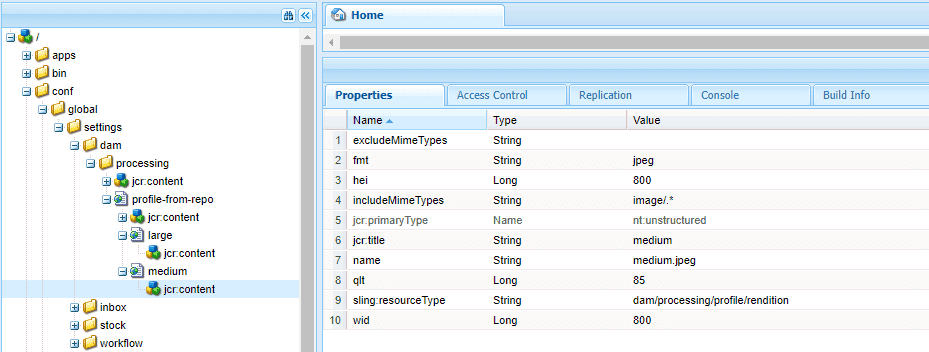

Let's see how Processing Profiles are stored in JCR.

Processing Profiles are stored in the /conf/global/settings/dam/processing folder. Each rendition is a child node of a Processing Profile node.

Let's model the Processing Profile in our code. It has a name and some renditions (we'll model those in a moment).

Content of src/main/java/com/mysite/local/tools/workflow/ProcessingProfile.java

    @Getter
    @Builder
    @EqualsAndHashCode
    @ToString
    public class ProcessingProfile {

        private final String name;
        private final List<Rendition> renditions;
    }

Note the Getter, Builder, EqualsAndHashCode, and ToString Lombok annotations.

Now let's model the rendition as a Sling Model. This class can be an inner static class of the class above.

    @Getter
    @Builder
    @NoArgsConstructor
    @AllArgsConstructor
    @ToString
    @EqualsAndHashCode
    @Model(adaptables = Resource.class)
    public static class Rendition {

        @ValueMapValue
        private String fmt;

        @ValueMapValue
        private Long hei;

        @ValueMapValue
        private Long wid;

        @ValueMapValue
        private Long qlt;

        @ValueMapValue
        private String includeMimeTypes;

        @ValueMapValue
        @Named("jcr:title")
        private String title;

        @ValueMapValue
        private String name;
    }

We can create an adapter method for the ProcessingProfile class to easily adapt a resource to an instance of our class:

Content of src/main/java/com/mysite/local/tools/workflow/ProcessingProfile.java

    static ProcessingProfile fromResource(Resource resource) {
        return ProcessingProfile.builder()
                .name(resource.getName())
                .renditions(
                        StreamSupport.stream(resource.getChildren().spliterator(), false)
                                // we only want the rendition nodes, not the jcr:content node
                                .filter(res -> !JcrConstants.JCR_CONTENT.equals(res.getName()))
                                .map(res -> Optional.ofNullable(res.getChild(JcrConstants.JCR_CONTENT))
                                        // we're handling the jcr:content of the rendition, not the Processing Profile's!
                                        .map(jcrContent -> jcrContent.adaptTo(Rendition.class))
                                )
                                .flatMap(Optional::stream)
                                .collect(Collectors.toList())
                )
                .build();
    }

Now, let's write a test!

Again, we'll be using AEM Mocks. The mocked content structure under /conf/global/settings/dam/processing is the following:

Content of src/test/resources/contentSamples/processingProfiles.json

    {
      "jcr:primaryType": "nt:folder",
      "profile-from-repo": {
        "jcr:primaryType": "cq:Page",
        "jcr:content": {
          "jcr:primaryType": "nt:unstructured",
          "jcr:title": "Profile from repo",
          "sling:resourceType": "dam/processing/profile"
        },
        "large": {
          "jcr:primaryType": "cq:Page",
          "jcr:content": {
            "jcr:primaryType": "nt:unstructured",
            "jcr:title": "sample.with.dots",
            "excludeMimeTypes": "",
            "includeMimeTypes": "image/.*",
            "fmt": "jpeg",
            "hei": 1600,
            "qlt": 85,
            "wid": 1600,
            "name": "sample.with.dots.jpeg",
            "sling:resourceType": "dam/processing/profile/rendition"
          }
        },
        "medium": {
          "jcr:primaryType": "cq:Page",
          "jcr:content": {
            "jcr:primaryType": "nt:unstructured",
            "jcr:title": "medium",
            "excludeMimeTypes": "",
            "includeMimeTypes": "image/.*",
            "fmt": "jpeg",
            "hei": 800,
            "qlt": 85,
            "wid": 800,
            "name": "medium.jpeg",
            "sling:resourceType": "dam/processing/profile/rendition"
          }
        }
      },
      "profile-from-repo2": {
        "jcr:primaryType": "cq:Page",
        "jcr:content": {
          "jcr:primaryType": "nt:unstructured",
          "jcr:title": "Profile from repo",
          "sling:resourceType": "dam/processing/profile"
        },
        "large2": {
          "jcr:primaryType": "cq:Page",
          "jcr:content": {
            "jcr:primaryType": "nt:unstructured",
            "jcr:title": "sample.with.dots",
            "excludeMimeTypes": "",
            "includeMimeTypes": "image/.*",
            "fmt": "jpeg",
            "hei": 16002,
            "qlt": 85,
            "wid": 16002,
            "name": "sample.with.dots2.jpeg",
            "sling:resourceType": "dam/processing/profile/rendition"
          }
        },
        "medium2": {
          "jcr:primaryType": "cq:Page",
          "jcr:content": {
            "jcr:primaryType": "nt:unstructured",
            "jcr:title": "medium",
            "excludeMimeTypes": "",
            "includeMimeTypes": "image/.*",
            "fmt": "jpeg",
            "hei": 8002,
            "qlt": 85,
            "wid": 8002,
            "name": "medium2.jpeg",
            "sling:resourceType": "dam/processing/profile/rendition"
          }
        }
      }
    }

And the test looks like the following:

    private final AemContext context = new AemContext();

    @BeforeAll
    void setUp() {
        context.load().json(
                "/contentSamples/processingProfiles.json",
                "/conf/global/settings/dam/processing"
        );
    }

    @Test
    @DisplayName("Given ProcessingProfile resource, When fromResource, Then return valid ProcessingProfile")
    void testFromResource() {
        Stream<Resource> input = Stream.of(
                "/conf/global/settings/dam/processing/profile-from-repo2",
                "/conf/global/settings/dam/processing/profile-from-repo"
        )
                .map(path -> context.resourceResolver().getResource(path));

        List<ProcessingProfile> actual = input.map(ProcessingProfile::fromResource)
                .collect(Collectors.toList());

        assertEquals(List.of(
                ProcessingProfile.builder()
                        .name("profile-from-repo2")
                        .renditions(
                                List.of(
                                        Rendition.builder()
                                                .name("sample.with.dots2.jpeg")
                                                .title("sample.with.dots")
                                                .includeMimeTypes("image/.*")
                                                .fmt("jpeg")
                                                .hei(16002L)
                                                .qlt(85L)
                                                .wid(16002L)
                                                .build(),
                                        Rendition.builder()
                                                .name("medium2.jpeg")
                                                .title("medium")
                                                .includeMimeTypes("image/.*")
                                                .fmt("jpeg")
                                                .hei(8002L)
                                                .qlt(85L)
                                                .wid(8002L)
                                                .build()
                                )
                        ).build(),
                ProcessingProfile.builder()
                        .name("profile-from-repo")
                        .renditions(
                                List.of(
                                        Rendition.builder()
                                                .name("sample.with.dots.jpeg")
                                                .title("sample.with.dots")
                                                .includeMimeTypes("image/.*")
                                                .fmt("jpeg")
                                                .hei(1600L)
                                                .qlt(85L)
                                                .wid(1600L)
                                                .build(),
                                        Rendition.builder()
                                                .name("medium.jpeg")
                                                .title("medium")
                                                .includeMimeTypes("image/.*")
                                                .fmt("jpeg")
                                                .hei(800L)
                                                .qlt(85L)
                                                .wid(800L)
                                                .build()
                                )
                        ).build()
        ), actual);
    }

Creating the renditions

Now that we have all the information we need, we have to actually make the renditions. We'll be using the RenditionMaker and Gfx services.

Content of src/main/java/com/mysite/local/tools/workflow/LocalRenditionMakerProcess.java

    @Reference
    private Gfx gfx;

    @Reference
    private RenditionMaker renditionMaker;

    @Reference
    private MimeTypeService mimeTypeService;

    // ...

    private NamedRenditionTemplate createRenditionTemplate(Asset asset, String renditionName, int width,
                                                           int height, int quality) {
        Plan plan = gfx.createPlan();
        plan.layer(0).set("src", asset.getPath());

        NamedRenditionTemplate template = NamedRenditionTemplate.builder()
                .gfx(gfx)
                .mimeType(mimeTypeService.getMimeType(renditionName))
                .renditionName(renditionName)
                .plan(plan)
                .build();

        Instructions instructions = plan.view();
        instructions.set("wid", width);
        instructions.set("hei", height);
        instructions.set("fit", "constrain,0");
        instructions.set("rszfast", quality <= 90);
        if (StringUtils.equalsAny(template.getMimeType(), "image/jpg", "image/jpeg")) {
            instructions.set("qlt", quality);
        } else if ("image/gif".equals(template.getMimeType())) {
            instructions.set("quantize", "adaptive,diffuse," + quality);
        }

        String fmt = StringUtils.substringAfter(template.getMimeType(), "/");
        if (StringUtils.equalsAny(fmt, "png", "gif", "tif")) {
            fmt += "-alpha";
        }
        instructions.set("fmt", fmt);

        return template;
    }

    @Getter
    @Builder
    private static class NamedRenditionTemplate implements RenditionTemplate {

        private Plan plan;
        private String renditionName;
        private String mimeType;
        private Gfx gfx;

        @Override
        public Rendition apply(Asset asset) {
            return Optional.ofNullable(asset.adaptTo(Resource.class))
                    .map(Resource::getResourceResolver)
                    .map(resourceResolver -> {
                        Rendition rendition = null;
                        try (InputStream stream = gfx.render(this.plan, resourceResolver)) {
                            if (stream != null) {
                                rendition = asset.addRendition(this.renditionName, stream, this.mimeType);
                            }
                        } catch (IOException e) {
                            log.error("Exception occurred while generating the rendition.", e);
                        }
                        return rendition;
                    })
                    .orElse(null);
        }
    }

Updating rendition metadata

There's one more thing!

The new AEM interface, introduced in the AEM as a Cloud Service version, has a nice feature of showing the exact size of a rendition in the Asset details view.

We have to populate those values in the rendition's metadata under the tiff:ImageWidth and tiff:ImageLength properties.

First, let's obtain the dimension of the rendition.

Content of src/main/java/com/mysite/local/tools/workflow/WorkflowUtil.java

    static Optional<Dimension> getRenditionSize(Rendition rendition) {
        return Optional.ofNullable(rendition)
                .map(rend -> {
                    // try-with-resources closes the binary stream once the image is decoded
                    try (InputStream stream = rend.getBinary().getStream()) {
                        BufferedImage image = ImageIO.read(stream);
                        // ImageIO.read returns null when no registered reader supports the format
                        return image != null ? new Dimension(image.getWidth(), image.getHeight()) : null;
                    } catch (IOException | RepositoryException e) {
                        log.error("Error occurred while reading the rendition.", e);
                        return null;
                    }
                });
    }

We can test this piece of code on a few sample images.

Content of src/test/java/com/mysite/local/tools/workflow/WorkflowUtilTest.java

    @Test
    @DisplayName("Given images inputStream, When getRenditionSize, Then return valid image dimensions")
    void testGetRenditionSize() {
        Rendition rendition = mock(Rendition.class);
        Binary binary = mock(Binary.class);
        when(rendition.getBinary()).thenReturn(binary);

        Stream.of(
                WorkflowUtilTest.class.getResourceAsStream("/images/testImage.bmp"),
                WorkflowUtilTest.class.getResourceAsStream("/images/testImage.png"),
                WorkflowUtilTest.class.getResourceAsStream("/images/testImage.jpg")
        )
                .map(inputStream -> {
                    try {
                        when(binary.getStream()).thenReturn(inputStream);
                    } catch (RepositoryException e) {
                        fail("Exception occurred.");
                    }
                    return WorkflowUtil.getRenditionSize(rendition);
                })
                .forEach(optDimension -> {
                    assertTrue(optDimension.isPresent());
                    Dimension dimension = optDimension.get();
                    assertEquals(4, dimension.width);
                    assertEquals(6, dimension.height);
                });
    }

The testImage files are sample 4x6 px images in different formats, located in the src/test/resources/images directory.
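If you don't have sample files at hand, comparable test images can be generated in memory. The following is a minimal sketch using only the JDK's ImageIO; the class InMemoryTestImage and its methods are hypothetical helpers and not part of the tool described here.

```java
import java.awt.Dimension;
import java.awt.image.BufferedImage;
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.InputStream;
import java.io.UncheckedIOException;
import javax.imageio.ImageIO;

/** Generates tiny images in memory and reads their dimensions back. */
final class InMemoryTestImage {

    /** Encodes a blank width x height image in the given ImageIO format name. */
    static byte[] encode(int width, int height, String format) {
        ImageIO.setUseCache(false); // keep everything in memory, no temp files
        BufferedImage image = new BufferedImage(width, height, BufferedImage.TYPE_INT_RGB);
        ByteArrayOutputStream out = new ByteArrayOutputStream();
        try {
            if (!ImageIO.write(image, format, out)) {
                throw new IllegalArgumentException("No ImageIO writer for format " + format);
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return out.toByteArray();
    }

    /** Decodes the bytes and returns the image dimensions. */
    static Dimension sizeOf(byte[] encoded) {
        try (InputStream in = new ByteArrayInputStream(encoded)) {
            BufferedImage image = ImageIO.read(in);
            if (image == null) {
                throw new IllegalArgumentException("Unsupported image data");
            }
            return new Dimension(image.getWidth(), image.getHeight());
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        for (String format : new String[] {"bmp", "png", "jpg"}) {
            Dimension size = sizeOf(encode(4, 6, format));
            System.out.println(format + ": " + size.width + "x" + size.height);
        }
    }
}
```

In a unit test, the byte array returned by encode could be wrapped in a ByteArrayInputStream and handed to the mocked Binary instead of a classpath resource.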

Now, let's write the metadata.

Content of src/main/java/com/mysite/local/tools/workflow/LocalRenditionMakerProcess.java

    private void updateRenditionMetadata(String renditionPath, ResourceResolver resourceResolver) {
        Resource renditionResource = resourceResolver.getResource(renditionPath);
        if (renditionResource != null) {
            Optional<Dimension> optDimension = WorkflowUtil.getRenditionSize(renditionResource.adaptTo(Rendition.class));
            if (optDimension.isPresent()) {
                Dimension dimension = optDimension.get();
                try {
                    Node renditionNode = Objects.requireNonNull(renditionResource.adaptTo(Node.class));
                    Node jcrContent = renditionNode.getNode(JcrConstants.JCR_CONTENT);
                    jcrContent.addMixin("dam:Metadata");
                    // reuse the metadata node if the workflow has already processed this rendition
                    Node metadata = jcrContent.hasNode("metadata")
                            ? jcrContent.getNode("metadata")
                            : jcrContent.addNode("metadata", JcrConstants.NT_UNSTRUCTURED);
                    metadata.setProperty("tiff:ImageWidth", dimension.width);
                    metadata.setProperty("tiff:ImageLength", dimension.height);
                    resourceResolver.commit();
                } catch (RepositoryException | PersistenceException e) {
                    log.error("Error while updating metadata for rendition.", e);
                }
            } else {
                log.error("Could not obtain dimensions for created rendition {}", renditionPath);
            }
        } else {
            log.error("Could not obtain resource for created rendition {}", renditionPath);
        }
    }

Putting it all together

Now that we have all the tools in place, let's put them all together.

Content of src/main/java/com/mysite/local/tools/workflow/LocalRenditionMakerProcess.java

    @Override
    public void execute(WorkItem workItem, WorkflowSession workflowSession, MetaDataMap metaDataMap) {
        String assetPath = WorkflowUtil.getAssetPath(workItem);
        if (StringUtils.isNotBlank(assetPath)) {
            ResourceResolver resourceResolver = Objects.requireNonNull(workflowSession.adaptTo(ResourceResolver.class));
            Resource assetResource = resourceResolver.getResource(assetPath);
            if (assetResource != null) {
                Set<String> processingProfilePaths = ProcessingProfilesUtil.getProcessingProfilePathsToApply(assetResource);
                processingProfilePaths.stream()
                        .map(resourceResolver::getResource)
                        .filter(Objects::nonNull)
                        .map(ProcessingProfile::fromResource)
                        .forEach(processingProfile -> processProfile(assetResource, processingProfile, resourceResolver));
            } else {
                log.error("Resource {} does not exist.", assetPath);
            }
        } else {
            log.error("Could not obtain path of the asset to process");
        }
    }

    private void processProfile(Resource assetResource, ProcessingProfile processingProfile,
                                ResourceResolver resourceResolver) {
        Asset asset = assetResource.adaptTo(Asset.class);
        processingProfile.getRenditions()
                .forEach(rendition -> {
                    processRendition(processingProfile.getName(), asset, rendition, resourceResolver);
                });
    }

The configuration

To finish everything up, you need to add the Process Step created above to the DAM Update Asset workflow. To do this, simply add a node in /conf/global/settings/workflow/models/dam/update_asset/jcr:content/flow

Content of src/main/content/jcr_root/conf/global/settings/workflow/models/dam/update_asset/jcr:content/flow

    <myCustomProcess
        jcr:primaryType="nt:unstructured"
        jcr:title="Generate Cloud Renditions"
        sling:resourceType="cq/workflow/components/model/process">
        <metaData
            jcr:primaryType="nt:unstructured"
            PROCESS="com.mysite.local.tools.workflow.LocalRenditionMakerProcess"
            PROCESS_AUTO_ADVANCE="true"/>
    </myCustomProcess>

Don't forget to reflect this change in /var/workflow/models/dam/update_asset/nodes along with all necessary transitions (it's best to sync the workflow from AEM's UI and then sync this node to your repo).

Content of src/main/content/jcr_root/var/workflow/models/dam/update_asset/nodes

    <nodeXYZ
        jcr:primaryType="cq:WorkflowNode"
        title="Generate Cloud Renditions"
        type="PROCESS">
        <metaData
            jcr:primaryType="nt:unstructured"
            PROCESS="com.mysite.local.tools.workflow.LocalRenditionMakerProcess"
            PROCESS_AUTO_ADVANCE="true"/>
    </nodeXYZ>

Last, but not least, add the required entries in filter.xml of the module.

Content of src/main/content/META-INF/vault/filter.xml

    <?xml version="1.0" encoding="UTF-8"?>
    <workspaceFilter version="1.0">
        <filter root="/conf/global/settings/workflow/models/dam/update_asset"/>
        <filter root="/var/workflow/models/dam/update_asset"/>
    </workspaceFilter>

Build & Deploy

Since we're using Gradle AEM Plugin, building and deploying is as easy as typing

    gradle packageDeploy

Note: you can use Gradle Wrapper as well.

Done!

Summary

As shown above, there may be many obstacles on the way to developing for AEM as a Cloud Service locally. Thankfully, most of the basic AEM mechanisms still work on local SDK and with some additional tweaking, AEM as a Cloud Service specific behaviors can be replicated in local development.

The code developed in this tutorial is available on Cognifide's GitHub: AEM Cloud Renditions Tool.

Hero image by rawpixel.com - www.freepik.com