Artificial Intelligence and Machine Learning are commonly used in the advanced analysis of images. Autonomous cars, face recognition and object detection are just a few examples of where these methods are used. Have you ever wondered how computers learned to see like humans? Read my article to find out!

What is Image Recognition?

It is natural for people to recognize objects, places, people and animals in a picture; it comes to us without much thought. We can easily describe what is in the picture, read the text on it or complete more difficult tasks like distinguishing muffins from chihuahuas. This process is quite hard for a computer to imitate.

The domain that deals with how computers can gain high-level understanding from digital images or videos is called Computer Vision and is a subcategory of Artificial Intelligence. Its main goal is to understand and automate the way people see.

Image recognition is a subset of Computer Vision: a set of methods for detecting and analyzing images. It is a technology capable of identifying objects in an image by analyzing its pixels.

Convolutional neural networks (CNN) history

David H. Hubel and Torsten Wiesel performed a series of experiments on cats in 1958 and 1959. As a result, we learned that many neurons in the visual cortex react only to visual stimuli located in a limited region of the visual field. Additionally, some neurons have larger receptive fields, and they react to more complex patterns that are combinations of the lower-level patterns.

These observations led to the idea that higher-level neurons are based on the outputs of neighboring lower-level neurons. These studies inspired the neocognitron, which gradually evolved into convolutional neural networks. Convolutional Neural Networks are one of the variants of neural networks used in the field of Computer Vision.

Architecture

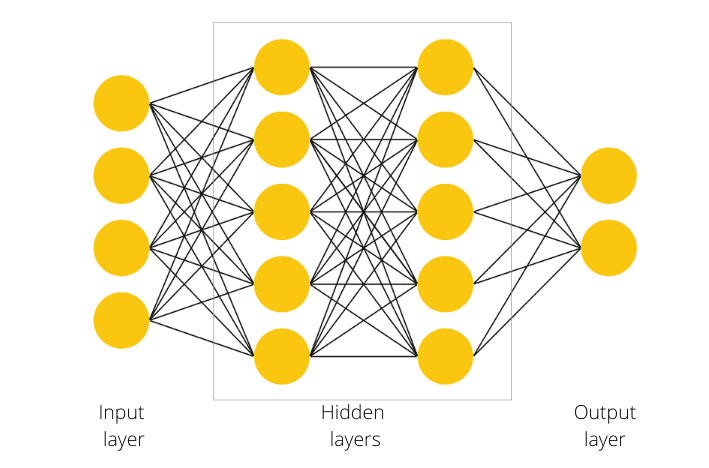

Using Convolutional Neural Networks (CNN), computers can achieve great performance on some complex visual tasks. The architecture of a CNN consists of neurons and layers.

Neurons are the elementary units of a neural network, which mimics the structure of the human brain. Each neuron is a mathematical function: it calculates the weighted sum of its inputs (plus a bias) and passes the result through an activation function to produce its output. Each layer in a neural network consists of a number of neurons.
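To make this concrete, here is a minimal sketch of a single artificial neuron in plain Python. It multiplies each input by a weight, adds a bias and applies an activation function. The weights, the bias and the choice of ReLU are illustrative assumptions, not values from the network built later in this article.

```python
def relu(x: float) -> float:
    """Rectified Linear Unit: passes positive values through, clips negatives to 0."""
    return max(0.0, x)


def neuron(inputs: list[float], weights: list[float], bias: float) -> float:
    """Weighted sum of the inputs plus a bias, passed through the ReLU activation."""
    if len(inputs) != len(weights):
        raise ValueError("inputs and weights must have the same length")
    weighted_sum = sum(x * w for x, w in zip(inputs, weights)) + bias
    return relu(weighted_sum)


# Hypothetical inputs, weights and bias
print(neuron([1.0, 0.5, -2.0], [0.5, 0.25, 0.125], bias=0.25))  # 0.625
```

During training, the network adjusts the weights and the bias; the formula itself stays the same.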

A CNN consists of an input layer, multiple hidden layers, and an output layer. The deeper the hidden layer, the more complex the objects it can recognize. The initial layers recognize edges, corners, circles and squares. Deeper layers can detect more complex patterns, e.g. eyes or ears. The deepest layers are able to recognize people, animals and places.

How does the CNN process images?

At the beginning, the input layer takes the pixels of the image in the form of arrays of numbers. Then, the hidden layers perform feature extraction by applying a series of calculations (such as convolution and pooling) to these numbers.

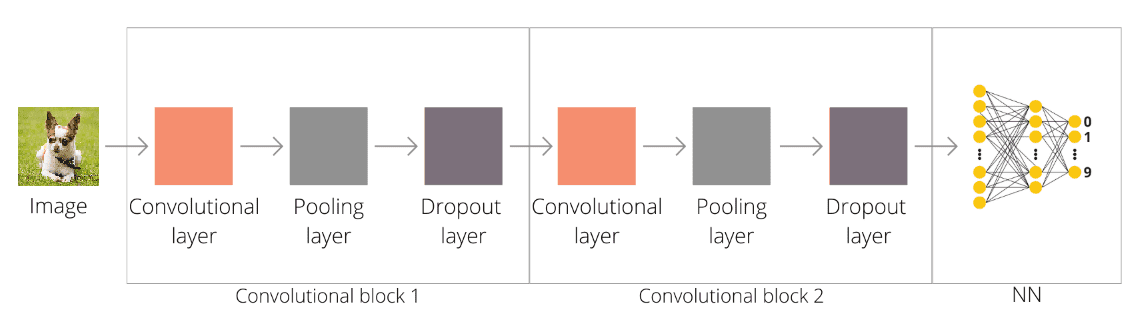

The three most important types of layers in a CNN are Convolutional, Pooling and Fully Connected layers.

The convolutional layer consists of a number of filters (also called kernels): small matrices of weights that are initialized with random numbers and adjusted during training. Their dimensions are defined by the user. Filters are used to detect patterns, e.g. one filter can find straight lines, another circles, etc. Each filter slides (convolves) over the pixels of the image. During convolution, the dot product of the filter and the part of the input it currently covers is computed.

Every image is represented as a matrix of pixel values. For the sake of simplicity, our example 5x5 image has only white and black pixels, so its representation consists of 0s and 1s. First, we multiply the values from the orange part of the input matrix (the reference matrix) element-wise by the values of the filter matrix and sum the products. This sum is the first result value, and it is placed in the upper left corner of the output matrix. Then the same dot product is calculated for the next 3x3 area of the input matrix, and each subsequent value is placed according to how far the reference matrix has shifted. As the filter convolves over the entire input, it produces a new representation of the input, called a feature map.
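The sliding-window calculation described above can be sketched in a few lines of NumPy, assuming a stride of 1 and no padding. The 5x5 image and the 3x3 filter below are hypothetical values, not the ones from the figure. Like the convolutional layers in deep learning libraries, the function does not flip the filter, so strictly speaking it computes a cross-correlation.

```python
import numpy as np


def convolve2d(image: np.ndarray, kernel: np.ndarray) -> np.ndarray:
    """Slide `kernel` over `image` (stride 1, no padding) and sum the element-wise products."""
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    output = np.zeros((out_h, out_w), dtype=image.dtype)
    for i in range(out_h):
        for j in range(out_w):
            window = image[i:i + kh, j:j + kw]  # the current 3x3 region of the input
            output[i, j] = np.sum(window * kernel)
    return output


# Hypothetical 5x5 black-and-white image (1 = white, 0 = black)
image = np.array([
    [1, 1, 1, 0, 0],
    [0, 1, 1, 1, 0],
    [0, 0, 1, 1, 1],
    [0, 0, 1, 1, 0],
    [0, 1, 1, 0, 0],
])

# Hypothetical 3x3 filter
kernel = np.array([
    [1, 0, 1],
    [0, 1, 0],
    [1, 0, 1],
])

print(convolve2d(image, kernel))
# [[4 3 4]
#  [2 4 3]
#  [2 3 4]]
```

A 3x3 filter fits into a 5x5 input in 3 positions per row and column, which is why the output is 3x3.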

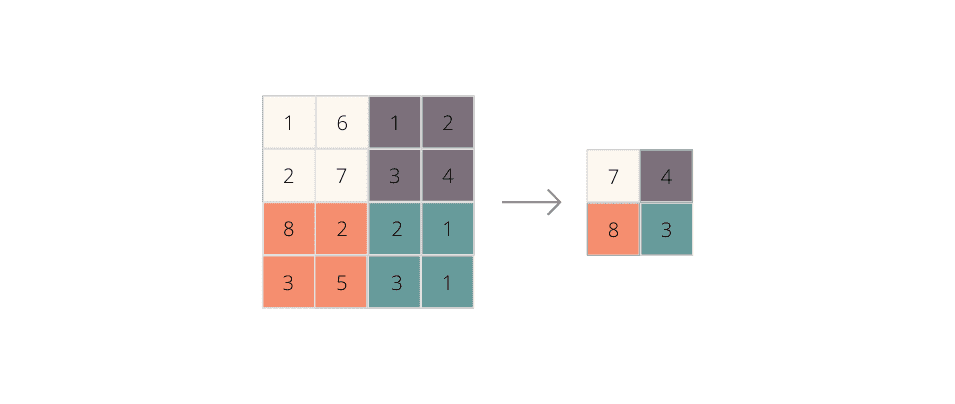

In the pooling layers, downsampling takes place: pooling reduces the dimensionality of the feature map. In the example below we can see how a max pooling layer with a 2x2 filter works: it takes the maximum value of each 2x2 patch of the matrix.
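Here is a minimal NumPy sketch of 2x2 max pooling, assuming a stride of 2 (the default for Keras' `MaxPooling2D` with `pool_size=(2, 2)`). The 4x4 feature map is a made-up example.

```python
import numpy as np


def max_pool_2x2(feature_map: np.ndarray) -> np.ndarray:
    """2x2 max pooling with stride 2: keep the largest value of each non-overlapping 2x2 patch."""
    h, w = feature_map.shape
    # Drop a trailing row/column if the size is odd (as padding="valid" does in Keras)
    trimmed = feature_map[: h - h % 2, : w - w % 2]
    # Split the map into 2x2 blocks, then take the maximum inside each block
    blocks = trimmed.reshape(h // 2, 2, w // 2, 2)
    return blocks.max(axis=(1, 3))


# Hypothetical 4x4 feature map
feature_map = np.array([
    [1, 3, 2, 4],
    [5, 6, 1, 2],
    [7, 2, 9, 0],
    [1, 8, 3, 4],
])

print(max_pool_2x2(feature_map))
# [[6 4]
#  [8 9]]
```

Each output value summarizes a 2x2 area, so the feature map shrinks to a quarter of its size while keeping the strongest responses.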

The objective of a fully connected layer is to take the results of the previous layers and use them to classify the image into a category.
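A fully connected (dense) layer is many neurons that all see the same input: the feature maps are flattened into a vector, multiplied by a weight matrix, and a bias vector is added. For classification, a softmax function then turns the 10 resulting scores into probabilities. A minimal NumPy sketch, assuming a hypothetical 2x2x3 input and random, untrained weights:

```python
import numpy as np


def softmax(scores: np.ndarray) -> np.ndarray:
    """Turn raw scores into probabilities that lie in (0, 1) and sum to 1."""
    shifted = scores - np.max(scores)  # subtracting the max avoids overflow in exp
    exp = np.exp(shifted)
    return exp / exp.sum()


def dense(x: np.ndarray, weights: np.ndarray, bias: np.ndarray) -> np.ndarray:
    """Fully connected layer: every output is a weighted sum of all inputs plus a bias."""
    return x @ weights + bias


rng = np.random.default_rng(seed=0)
feature_maps = rng.random((2, 2, 3))  # hypothetical output of the last pooling layer
x = feature_maps.flatten()  # 2 * 2 * 3 = 12 values
num_classes = 10
weights = rng.normal(size=(x.size, num_classes))  # random, untrained weights
bias = np.zeros(num_classes)

probabilities = softmax(dense(x, weights, bias))
print(probabilities.round(3), probabilities.sum())
print("predicted class index:", int(np.argmax(probabilities)))
```

With untrained weights the prediction is meaningless; training adjusts `weights` and `bias` so that the highest probability lands on the correct class.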

Building a Neural Network from scratch using Keras

To create a neural network, we first need data to train the model. We will use the CIFAR-10 dataset, which contains 60000 images in the following 10 classes: airplane, automobile, bird, cat, deer, dog, frog, horse, ship, truck. There are 50000 training images and 10000 test images, and each image has a resolution of 32x32 pixels.

The picture below shows the convolutional neural network architecture that we will create using Keras.

Let's start with the code to train the network.

```python
# Written against the Keras 2.x API. In Keras 3, save_weights() requires
# a file name ending in ".weights.h5".
import keras
from keras.datasets import cifar10
from keras.models import Sequential
from keras.layers import Dense, Dropout, Flatten, Conv2D, MaxPooling2D
from pathlib import Path

# loading the data set
# x_train and x_test are pictures,
# y_train and y_test are numbers 0-9 that tell which category each picture belongs to.
(x_train, y_train), (x_test, y_test) = cifar10.load_data()

# normalizing the data set to the 0-1 range
x_train = x_train.astype('float32')
x_test = x_test.astype('float32')
x_train /= 255
x_test /= 255

# converting class vectors to binary class matrices (one-hot encoding)
# Labels are single values from 0 to 9. Instead, we want each label to be an array
# with one element set to 1 and the rest set to 0.
y_train = keras.utils.to_categorical(y_train, 10)
y_test = keras.utils.to_categorical(y_test, 10)

# creating a model and adding layers
model = Sequential()

# Convolutional block #1
# A 2D convolutional layer (32 filters, each 3x3) detects features of the picture (e.g. lines, circles)
# and returns feature maps showing where each feature can be found.
model.add(Conv2D(32, (3, 3), padding='same', input_shape=(32, 32, 3), activation="relu"))
model.add(Conv2D(32, (3, 3), activation="relu"))
# The pooling layer reduces the size of the feature maps by keeping only the maximum
# value in each 2x2 square, which drops less important details.
model.add(MaxPooling2D(pool_size=(2, 2)))
# Dropout randomly sets 25% of the inputs to 0 during training, which helps to prevent overfitting.
model.add(Dropout(0.25))

# Convolutional block #2
model.add(Conv2D(64, (3, 3), padding='same', activation="relu"))
model.add(Conv2D(64, (3, 3), activation="relu"))
model.add(MaxPooling2D(pool_size=(2, 2)))
model.add(Dropout(0.25))

# collapsing the feature maps into a one-dimensional vector
model.add(Flatten())

# The number of nodes (512) was chosen arbitrarily and tuned manually.
# The Rectified Linear Unit "relu" activation was chosen for performance.
model.add(Dense(512, activation="relu"))
model.add(Dropout(0.5))

# We expect 10 possible answers, so the number of output nodes is equal to 10.
# The softmax activation function makes all values fall in the interval (0, 1) and add up to 1.
# They describe the probabilities of the particular answers.
model.add(Dense(10, activation="softmax"))

# compiling the model
# categorical_crossentropy - loss function used for multi-class classification problems
# Adaptive Moment Estimation "adam" - optimizer that updates the network weights
# accuracy - metric used to judge the performance of the model
model.compile(
    loss='categorical_crossentropy',
    optimizer='adam',
    metrics=['accuracy']
)

# training the model
# batch_size - the number of training examples in one forward/backward pass
# epochs - the number of passes through the entire dataset
# shuffle - shuffles the training data before each epoch, so the model does not learn its ordering
model.fit(
    x_train,
    y_train,
    batch_size=64,
    epochs=30,
    validation_data=(x_test, y_test),
    shuffle=True
)

# saving the network structure
model_structure = model.to_json()
f = Path("model_structure.json")
f.write_text(model_structure)

# saving the network weights
model.save_weights("model_weights.h5")
```

Now we can test the created model. We will give it a photo of a chihuahua as input and see what the model predicts.

```python
from keras.models import model_from_json
from pathlib import Path
from keras.preprocessing import image
import numpy as np

class_labels = [
    "Plane",
    "Car",
    "Bird",
    "Cat",
    "Deer",
    "Dog",
    "Frog",
    "Horse",
    "Boat",
    "Truck"
]

# loading the JSON file that contains the model's structure
f = Path("model_structure.json")
model_structure = f.read_text()
model = model_from_json(model_structure)

# reloading the model's trained weights
model.load_weights("model_weights.h5")

# loading an image file to test, resizing it to 32x32 pixels (as required by this model)
img = image.load_img("chihuahua.jpg", target_size=(32, 32))

# converting the image to a numpy array and scaling it to the 0-1 range,
# the same normalization that was applied to the training data
image_to_test = image.img_to_array(img) / 255

# adding a fourth dimension to the image (since Keras expects a batch of images, not a single image)
list_of_images = np.expand_dims(image_to_test, axis=0)

# making a prediction
results = model.predict(list_of_images)

# since we are only testing one image, we only need to check the first result
single_result = results[0]

# we get a likelihood score for each of the 10 possible classes; find the class with the highest score
most_likely_class_index = int(np.argmax(single_result))
class_likelihood = single_result[most_likely_class_index]

# getting the name of the most likely class
class_label = class_labels[most_likely_class_index]

# printing the result
print("This image is a {} - Likelihood: {:f}".format(class_label, class_likelihood))
```

Below, we can see the result printed in the console:

```
This image is a Dog - Likelihood: 1.000000
```

The result is the label with the highest probability. In our case, the CNN correctly recognized the chihuahua as a dog.

Azure Computer Vision

To use Image Recognition in your project, you do not have to create your own network. An alternative is to use ready-made cloud solutions such as Microsoft Azure or Google Vision AI. Azure Computer Vision provides pre-trained machine learning models that can be used for Image Recognition tasks. You can build Computer Vision applications with the SDK or by calling the REST API directly. The SDK is available for .NET, Python, Java, Node.js and Go. In response, we get a description of the objects in the picture. Azure Computer Vision detects objects, brands, faces, image types, domain-specific content and the color scheme. Additionally, it can generate a thumbnail, find the area of interest and describe an image.
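Calling the REST API directly can be sketched with nothing but the Python standard library. This is a minimal sketch that assumes the Computer Vision v3.2 Analyze Image endpoint (`/vision/v3.2/analyze`), its `visualFeatures` query parameter and the `Ocp-Apim-Subscription-Key` header. `ENDPOINT`, `KEY` and `landscape.jpg` are placeholders, and newer API versions may use different routes, so check the current Azure documentation before relying on it.

```python
import json
import urllib.parse
import urllib.request

# Placeholders (assumptions): replace with your own resource endpoint and key
ENDPOINT = "https://<your-resource-name>.cognitiveservices.azure.com"
KEY = "<your-subscription-key>"


def build_analyze_url(endpoint: str, features: list[str]) -> str:
    """Build the (assumed v3.2) Analyze Image URL with the requested visual features."""
    query = urllib.parse.urlencode({"visualFeatures": ",".join(features)})
    return f"{endpoint.rstrip('/')}/vision/v3.2/analyze?{query}"


def analyze_image(endpoint: str, key: str, image_path: str, features: list[str]) -> dict:
    """Upload a local image file as raw bytes and return the parsed JSON response."""
    with open(image_path, "rb") as f:
        body = f.read()
    request = urllib.request.Request(
        build_analyze_url(endpoint, features),
        data=body,
        headers={
            "Ocp-Apim-Subscription-Key": key,
            "Content-Type": "application/octet-stream",
        },
        method="POST",
    )
    with urllib.request.urlopen(request) as response:
        return json.load(response)


# Example usage (requires a real endpoint, key and image file):
# result = analyze_image(ENDPOINT, KEY, "landscape.jpg", ["Tags", "Description", "Color"])
# print(result["description"]["captions"])
```

The official SDKs wrap the same HTTP call; using the REST API directly avoids an extra dependency at the cost of handling requests and errors yourself.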

We can try Azure Computer Vision online with our own picture at https://azure.microsoft.com/en-us/services/cognitive-services/computer-vision/. In the "See it in action" section, click "Upload", then "Browse", and select a photo from the disk.

I used a landscape photo for testing.

The response we received, with its values in JSON format, is presented below.

| Feature name | Value |
|---|---|
| Objects | `[]` |
| Tags | `[{"name": "water", "confidence": 0.9876069}, {"name": "lake", "confidence": 0.981662154}, {"name": "nature", "confidence": 0.978817}, {"name": "mountain", "confidence": 0.934187233}, {"name": "tree", "confidence": 0.926067352}, {"name": "landscape", "confidence": 0.8743861}, {"name": "cloud", "confidence": 0.826629}, {"name": "sky", "confidence": 0.820298433}, {"name": "reflection", "confidence": 0.6055093}, {"name": "canyon", "confidence": 0.5721667}]` |
| Description | `{"tags": ["water", "nature", "mountain", "small", "sitting", "lake", "orange", "large", "boat", "man", "riding", "blue", "river", "ocean", "group"], "captions": [{"text": "water next to a mountain", "confidence": 0.877095938}]}` |
| Image format | "Jpeg" |
| Image dimensions | 2847 x 4226 |
| Black and white | false |
| Adult content | false |
| Adult score | 0.00173477619 |
| Gory | false |
| Gore score | 0.00524360733 |
| Racy | false |
| Racy score | 0.002463461 |
| Categories | `[{"name": "outdoor_", "score": 0.00390625}, {"name": "people_swimming", "score": 0.80859375}]` |
| Faces | `[]` |
| Dominant color background | "Black" |
| Dominant color foreground | "Green" |
| Accent color | #1295B9 |

Azure described the image as "water next to a mountain" and detected water, a lake, nature, trees and clouds in it.
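In an application, a response like this is usually post-processed, for example by keeping only the tags the service is reasonably sure about. A small sketch using the tags returned above; the 0.8 threshold is an arbitrary assumption.

```python
import json

# The "Tags" value from the response above, copied verbatim
response_tags = json.loads("""
[
    {"name": "water", "confidence": 0.9876069},
    {"name": "lake", "confidence": 0.981662154},
    {"name": "nature", "confidence": 0.978817},
    {"name": "mountain", "confidence": 0.934187233},
    {"name": "tree", "confidence": 0.926067352},
    {"name": "landscape", "confidence": 0.8743861},
    {"name": "cloud", "confidence": 0.826629},
    {"name": "sky", "confidence": 0.820298433},
    {"name": "reflection", "confidence": 0.6055093},
    {"name": "canyon", "confidence": 0.5721667}
]
""")


def confident_tags(tags: list[dict], threshold: float = 0.8) -> list[str]:
    """Return the names of tags whose confidence is at least `threshold`."""
    return [tag["name"] for tag in tags if tag["confidence"] >= threshold]


print(confident_tags(response_tags))
# ['water', 'lake', 'nature', 'mountain', 'tree', 'landscape', 'cloud', 'sky']
```

Raising the threshold trades recall for precision: with 0.95, only "water", "lake" and "nature" remain.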

Summary

We saw what Image Recognition is and how a CNN works. We used Python with Keras to create our own Convolutional Neural Network, and we got to know a cloud solution from Azure. Azure Computer Vision is a good choice for people starting their adventure with image processing. For more advanced users, creating their own models may be the better solution, as it gives more configuration options.

Hero image by Fitore F on Unsplash

Featured image by Moritz Kindler on Unsplash