Apache Camel is the Swiss Army knife for developers doing integration work. Read how you can use it to perform complex operations on your AEM platform.

Have you ever tried to import huge amounts of data into AEM at once? If so, you know that the whole process is complex and that it keeps changing and evolving.

Integrating AEM with external services is becoming more and more common. One such integration is feeding AEM with data from an external source and creating user-friendly content on top of it. A simple import is usually not enough: the data must go through additional processing before it is finally published.

I’d like to share the process we follow when integrating with an external data source. Both the structure of the data and the way it is processed change frequently, so the import process has to be scalable and flexible enough to adapt to those changes without a huge development effort. Let's talk about Apache Camel.

What is Apache Camel?

To understand what Apache Camel is, you first need to understand Integration Patterns. But let's take a shortcut and quote some of the best descriptions of Apache Camel from Stack Overflow:

Apache Camel is messaging technology glue with routing. It joins together messaging start and end points allowing the transference of messages from different sources to different destinations. For example: JMS -> JSON, HTTP -> JMS or funneling FTP -> JMS, HTTP -> JMS, JSON -> JMS

Apache Camel is the Swiss Army knife for developers doing integration work.

Apache Camel is an open source Java framework that focuses on making integration easier and more accessible to developers.

Sounds interesting, right?

Just as Software Design Patterns such as Factory, Proxy, Builder, Facade and Command help solve recurring programming problems, Integration Patterns are a set of reusable solutions to common system integration challenges.

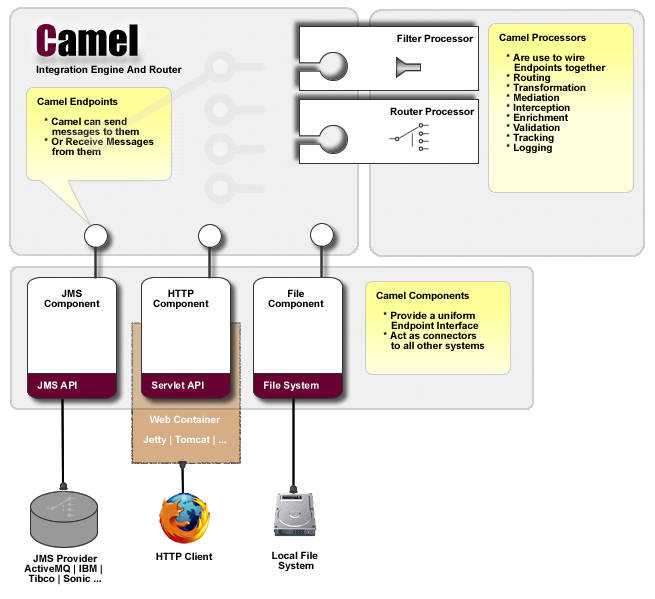

Basic principles: Processors, Endpoints, Components

The power of Apache Camel rests on three principles:

- An Endpoint is an entry or exit channel that manages communication between parts of the system.

- A Component is an endpoint factory.

- A Processor is a unit responsible for transforming or processing the objects carried in the messages exchanged between endpoints.

source: http://camel.apache.org/
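The relationship between the three principles can be illustrated with a toy model in plain Java. This is a minimal sketch, not Camel's API: `ToyEndpoint`, `ToyComponent`, `InMemoryComponent` and `ToyContext` are hypothetical names invented for this illustration. What it borrows from Camel is the idea that an endpoint URI such as `direct:processProduct` starts with a scheme that selects the component, and that the component then creates the endpoint for the rest of the URI, while a processor transforms the message on its way to that endpoint.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.function.UnaryOperator;

// Toy model of Camel's core concepts (hypothetical types, NOT the real Camel API).
interface ToyEndpoint {
    void send(String body);    // channel through which a message enters
    List<String> received();   // messages that have reached this endpoint so far
}

// A component is a factory for endpoints.
interface ToyComponent {
    ToyEndpoint createEndpoint(String remaining);
}

// In-memory component: each endpoint name maps to one endpoint that records messages.
class InMemoryComponent implements ToyComponent {
    private final Map<String, ToyEndpoint> endpoints = new HashMap<>();

    @Override
    public ToyEndpoint createEndpoint(String remaining) {
        return endpoints.computeIfAbsent(remaining, name -> new ToyEndpoint() {
            private final List<String> messages = new ArrayList<>();

            @Override
            public void send(String body) { messages.add(body); }

            @Override
            public List<String> received() { return messages; }
        });
    }
}

class ToyContext {
    private final Map<String, ToyComponent> components = new HashMap<>();

    void addComponent(String scheme, ToyComponent component) {
        components.put(scheme, component);
    }

    // Resolve "scheme:rest": the scheme picks the component, which creates the endpoint.
    ToyEndpoint endpoint(String uri) {
        int colon = uri.indexOf(':');
        ToyComponent component = colon < 0 ? null : components.get(uri.substring(0, colon));
        if (component == null) {
            throw new IllegalArgumentException("No component for URI " + uri);
        }
        return component.createEndpoint(uri.substring(colon + 1));
    }

    // A processor is modelled as a function that transforms the message body
    // before it is handed to the target endpoint.
    void send(String toUri, String body, UnaryOperator<String> processor) {
        endpoint(toUri).send(processor.apply(body));
    }

    public static void main(String[] args) {
        ToyContext context = new ToyContext();
        context.addComponent("memory", new InMemoryComponent());
        context.send("memory:products", "drill", String::toUpperCase);
        System.out.println(context.endpoint("memory:products").received()); // [DRILL]
    }
}
```

In real Camel the components are registered with the `CamelContext` and resolved from the URI in a similar way, but endpoints, exchanges and processors carry far more behaviour than this sketch.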

For example, suppose we want to integrate a JMS system that triggers saving some data in a database and then sends an email to the administrator about the database update. In that case we will have three Components:

- JMS (e.g. ActiveMQ),

- Database (e.g. MySQL),

- Email Server (e.g. Geronimo mail).

Each of these components will have an associated Camel endpoint:

- JMS will have a queue to which messages are posted and from which the system reads them,

- Database will have a connection pool,

- Email Server will have e.g. the address of the SMTP server used to send emails.

Sample processors could be:

- MessagesProcessor that will examine the message and decide if the database update should be performed.

- DatabaseProcessor that will convert the message to a database object.

- EmailProcessor that will send an email with information about the database update result.
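The same example can be sketched as a chain of the three processors. This is a plain-Java illustration, not Camel code: in-memory collections stand in for the JMS queue, the MySQL database and the mail server, and the message format `UPDATE key=value` is an assumption made only for this sketch.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Hypothetical JMS -> database -> email flow. In-memory stand-ins replace
// the real JMS queue, MySQL database and SMTP server.
class DatabaseUpdateFlow {
    final Map<String, String> database = new HashMap<>(); // stand-in for MySQL
    final List<String> sentEmails = new ArrayList<>();    // stand-in for SMTP

    // MessagesProcessor: decide whether the message requires a database update.
    static boolean messagesProcessor(String message) {
        return message.startsWith("UPDATE ") && message.contains("=");
    }

    // DatabaseProcessor: convert "UPDATE key=value" into a record and store it.
    String databaseProcessor(String message) {
        String[] keyValue = message.substring("UPDATE ".length()).split("=", 2);
        String key = keyValue[0].trim();
        String value = keyValue[1].trim();
        database.put(key, value);
        return "Database updated: " + key + "=" + value;
    }

    // EmailProcessor: notify the administrator about the result of the update.
    void emailProcessor(String result) {
        sentEmails.add("To: admin | " + result);
    }

    // Route for a single message read from the queue.
    void onMessage(String message) {
        if (!messagesProcessor(message)) {
            return; // no update needed, the message is dropped
        }
        emailProcessor(databaseProcessor(message));
    }

    public static void main(String[] args) {
        DatabaseUpdateFlow flow = new DatabaseUpdateFlow();
        flow.onMessage("UPDATE price=10");
        flow.onMessage("PING");
        System.out.println(flow.database);   // {price=10}
        System.out.println(flow.sentEmails); // [To: admin | Database updated: price=10]
    }
}
```

Keeping each step in its own small unit is what makes the flow easy to change: a new requirement usually means adding or replacing one processor rather than rewriting the whole chain.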

From XML file through JCR nodes to replication - integration patterns in use

Let's consider a scenario where an external source provides data (a set of products, manufacturers and product categories) in XML format. Each product ends up as its own AEM page so that it can be presented to the end user. A product has related data, e.g. a manufacturer and a product category, each with its own unique page.

Let's follow one product on its journey from the XML entity to the front-end page. To perform this transformation, the following steps must be taken:

- Parse XML entity into POJO (a Java object representation of the entity),

- Validate entity,

- Store entity in JCR / create page,

- Replicate this page to AEM publish instances,

- Asynchronously download product image and replicate it to AEM publish instances.
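The first two steps (parsing and validation) can be sketched in plain Java with the JDK's built-in DOM parser. The XML layout of a `<product>` element, the `ParsedProduct` POJO and the validation rules are assumptions made for illustration; a real feed will have its own schema and rules.

```java
import java.io.ByteArrayInputStream;
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.List;
import javax.xml.parsers.DocumentBuilderFactory;
import org.w3c.dom.Element;
import org.w3c.dom.NodeList;

// Hypothetical POJO for one product entity from the XML feed.
class ParsedProduct {
    final String id, name, manufacturerId, categoryId, imageUrl;

    ParsedProduct(String id, String name, String manufacturerId, String categoryId, String imageUrl) {
        this.id = id;
        this.name = name;
        this.manufacturerId = manufacturerId;
        this.categoryId = categoryId;
        this.imageUrl = imageUrl;
    }
}

class ProductXml {
    // Step 1: parse a single <product> element (assumed layout) into a POJO.
    static ParsedProduct parse(String xml) {
        try {
            DocumentBuilderFactory factory = DocumentBuilderFactory.newInstance();
            // Reject DOCTYPE declarations to protect against XXE attacks.
            factory.setFeature("http://apache.org/xml/features/disallow-doctype-decl", true);
            Element root = factory.newDocumentBuilder()
                    .parse(new ByteArrayInputStream(xml.getBytes(StandardCharsets.UTF_8)))
                    .getDocumentElement();
            return new ParsedProduct(root.getAttribute("id"), text(root, "name"),
                    text(root, "manufacturer"), text(root, "category"), text(root, "image"));
        } catch (Exception e) {
            throw new IllegalArgumentException("Cannot parse product XML", e);
        }
    }

    // Text of the first descendant element with the given tag, or "" if it is missing.
    private static String text(Element parent, String tag) {
        NodeList nodes = parent.getElementsByTagName(tag);
        return nodes.getLength() == 0 ? "" : nodes.item(0).getTextContent().trim();
    }

    // Step 2: validate; an empty list means the entity can be stored in the JCR.
    static List<String> validate(ParsedProduct product) {
        List<String> errors = new ArrayList<>();
        if (product.id.isEmpty()) errors.add("missing id");
        if (product.name.isEmpty()) errors.add("missing name");
        if (product.manufacturerId.isEmpty()) errors.add("missing manufacturer");
        return errors;
    }

    public static void main(String[] args) {
        ParsedProduct product = parse("<product id=\"p-1\"><name>Drill</name>"
                + "<manufacturer>m-7</manufacturer><category>tools</category></product>");
        System.out.println(product.name + " " + validate(product)); // Drill []
    }
}
```

Returning a list of errors instead of throwing on the first problem makes it easy for a later step to log or report every issue of an invalid entity at once.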

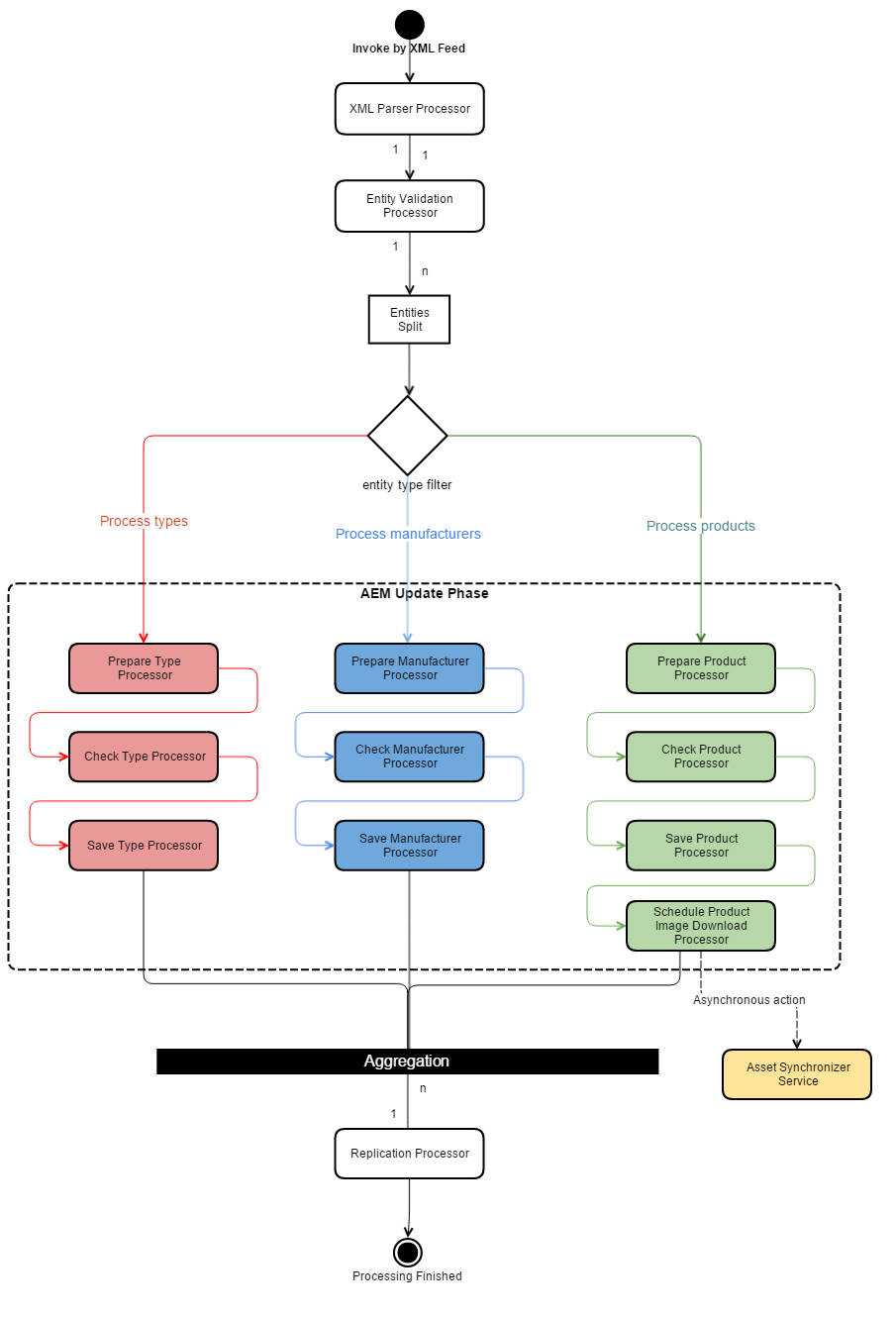

The diagram above presents the whole path of the batch update.

Several Integration Patterns were used in the process it illustrates.

Composed Message Processor

This complex integration pattern can be broken down into several basic elements assembled together:

- Splitter - divides the batch of entities into a stream of independent items. From this point on, each entity is processed separately (but possibly simultaneously with others) until aggregation.

- Router - after splitting, additional routing is required because there are several types of entities and each type is processed in its own way (e.g. products need their image downloaded).

- Aggregator - after its custom processing, each entity waits for a common phase. The Aggregator gathers all entities; it knows the total number of items to aggregate and how long it should wait for them to arrive before performing the aggregation. Once all entities are joined together again, the next steps are performed (e.g. batch replication, which is much faster than replicating entities one by one).

In our scenario, there is a list of entities (products, manufacturers, types). The Splitter splits the list so that each entity is treated separately, routed and processed along its own path, and then joined again in the Aggregator.
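To show what each element does on its own, the flow can be sketched in plain Java before looking at the Camel route. This is an illustration under simplifying assumptions, not how Camel implements the pattern: the entity classes only carry an id, the "processing" of each type just computes a hypothetical page path, and a thread pool plays the role of parallel processing after the split. The aggregator completes either when it has received as many results as the batch contained (the completion size) or when the timeout expires.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.LinkedBlockingQueue;
import java.util.concurrent.TimeUnit;

// Hypothetical entity types, reduced to an id.
class ProductEntity { final String id; ProductEntity(String id) { this.id = id; } }
class ManufacturerEntity { final String id; ManufacturerEntity(String id) { this.id = id; } }
class TypeEntity { final String id; TypeEntity(String id) { this.id = id; } }

class ComposedMessageProcessor {
    // Router: pick the type-specific processing path (like choice()/when()).
    // Each path here only computes a hypothetical page path.
    static String route(Object entity) {
        if (entity instanceof ProductEntity) return "/content/products/" + ((ProductEntity) entity).id;
        if (entity instanceof ManufacturerEntity) return "/content/manufacturers/" + ((ManufacturerEntity) entity).id;
        if (entity instanceof TypeEntity) return "/content/types/" + ((TypeEntity) entity).id;
        throw new IllegalArgumentException("Unsupported entity: " + entity); // otherwise()
    }

    // Splitter + Router + Aggregator for one batch.
    static List<String> process(List<?> batch, long timeoutMillis) {
        ExecutorService pool = Executors.newFixedThreadPool(4);
        BlockingQueue<String> results = new LinkedBlockingQueue<>();
        List<String> aggregated = new ArrayList<>();
        try {
            for (Object entity : batch) {                       // Splitter
                // Router + processing; if a path throws, its result never arrives
                // and the timeout completes the batch without it.
                pool.submit(() -> results.add(route(entity)));
            }
            long deadline = System.nanoTime() + TimeUnit.MILLISECONDS.toNanos(timeoutMillis);
            while (aggregated.size() < batch.size()) {          // completion size
                String page = results.poll(deadline - System.nanoTime(), TimeUnit.NANOSECONDS);
                if (page == null) {
                    break;                                      // completion timeout
                }
                aggregated.add(page);
            }
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        } finally {
            pool.shutdownNow();
        }
        return aggregated; // the whole batch can now be replicated at once
    }
}
```

In Camel, a failing entity would normally be handed to the error handler rather than silently skipped; the timeout here only mirrors the Aggregator's "how long to wait" setting.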

The following snippet uses the Apache Camel Java API to construct the Composed Message Processor routing:

```java
// Processor instances may be e.g. OSGi components referenced by the route builder
@Reference
private PrepareProductProcessor prepareProductProcessor;

// ... (the other processors are referenced in the same way)

// Route definitions inside CustomRouteBuilder.configure():
from("direct:processEntityMessages")
    .split(body()) // process each entity separately from here
        .choice()
            .when(body().isInstanceOf(ProductEntity.class)).to("direct:processProduct")
            .when(body().isInstanceOf(ManufacturerEntity.class)).to("direct:processManufacturer")
            .when(body().isInstanceOf(TypeEntity.class)).to("direct:processType")
            .otherwise().to("direct:ErrorEndpoint")
        .end() // end of choice
    .end(); // end of split

from("direct:processProduct")
    .process(prepareProductProcessor)
    .process(checkProductProcessor)
    .process(saveProductProcessor)
    .process(scheduleProductImageDownloadProcessor)
    .multicast().parallelProcessing() // send each product to both recipients concurrently
        .to("direct:aggregationStep")
        .to("direct:asynchronousDAMUpdate")
    .end();

from("direct:processManufacturer")
    .process(prepareManufacturerProcessor)
    .process(checkManufacturerProcessor)
    .process(saveManufacturerProcessor)
    .to("direct:aggregationStep");

from("direct:processType")
    .process(prepareTypeProcessor)
    .process(checkTypeProcessor)
    .process(saveTypeProcessor)
    .to("direct:aggregationStep");

from("direct:aggregationStep")
    // aggregate all separate entities and process them as a batch from now on;
    // the "id" header is the correlation key shared by all entities of one batch
    .aggregate(header("id"), new CustomAggregationStrategy())
        .completionSize(header("batchSize")) // hypothetical header: number of entities in the batch
        .completionTimeout(60000)            // example value: stop waiting after 60 seconds
    .to("direct:batchReplicate");
```

Multiple endpoints (Recipient List)

During the custom processing of products, an asynchronous step has to be performed: downloading the product image. Therefore, each processed product is posted to two recipient channels simultaneously: one leading to the aggregator and a second one ending in a runner of asynchronous jobs responsible for downloading images. This pattern is a great way to integrate AEM Workflows with complex processing: the asynchronous download and the AEM Rendition Workflow can run concurrently with further processing.
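The idea behind a parallel multicast can be sketched in plain Java with `CompletableFuture`: the same message is handed to every recipient on a thread pool, and the caller continues only when all recipients have accepted it. This is an illustration of the pattern, not Camel's implementation; the `ParallelMulticast` class is hypothetical, and the two recipients in `main` are in-memory queues standing in for the aggregation step and the image download job runner.

```java
import java.util.Arrays;
import java.util.List;
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ConcurrentLinkedQueue;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.function.Consumer;

class ParallelMulticast {
    // Deliver the same message to every recipient concurrently and wait for all of them.
    static <T> void multicast(T message, List<Consumer<T>> recipients, ExecutorService pool) {
        CompletableFuture<?>[] deliveries = recipients.stream()
                .map(recipient -> CompletableFuture.runAsync(() -> recipient.accept(message), pool))
                .toArray(CompletableFuture[]::new);
        // join() throws a CompletionException if any recipient failed
        CompletableFuture.allOf(deliveries).join();
    }

    public static void main(String[] args) {
        ExecutorService pool = Executors.newFixedThreadPool(2);
        ConcurrentLinkedQueue<String> aggregationStep = new ConcurrentLinkedQueue<>();
        ConcurrentLinkedQueue<String> imageDownloadJobs = new ConcurrentLinkedQueue<>();
        List<Consumer<String>> recipients = Arrays.asList(aggregationStep::add, imageDownloadJobs::add);
        multicast("product-42", recipients, pool);
        pool.shutdown();
        System.out.println(aggregationStep + " " + imageDownloadJobs); // [product-42] [product-42]
    }
}
```

The image download recipient only enqueues a job here, so the product can move on to aggregation without waiting for the download itself to finish.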

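```java
// Camel DSL, within the product route: send each product to both recipients concurrently
.multicast().parallelProcessing()
    .to("direct:lastProductProcessing")
    .to("direct:asynchronousDAMUpdate")
```

Error handling (Dead Letter Channel)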

One more Integration Pattern was used in the solution above, although it has not been mentioned yet: error handling in a complex system. Dead Letter Channel is a pattern for handling messages that failed during processing, so that they can at least be logged or reported in some other way.
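In Camel, the dead letter channel can also be combined with redelivery, e.g. `deadLetterChannel("direct:Error").maximumRedeliveries(3)`, so that a message is retried a few times before it is given up on. The plain-Java sketch below only illustrates that behaviour under simplifying assumptions: the `DeadLetterChannel` class is a hypothetical stand-in, not Camel's error handler, and it retries immediately without any redelivery delay.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Consumer;

// Hypothetical dead letter channel with simple redelivery (no delay between attempts).
class DeadLetterChannel<T> {
    private final Consumer<T> processor;
    private final int maximumRedeliveries;
    final List<T> deadLetters = new ArrayList<>();           // stand-in for "direct:Error"
    final List<RuntimeException> causes = new ArrayList<>(); // last failure per dead letter

    DeadLetterChannel(Consumer<T> processor, int maximumRedeliveries) {
        this.processor = processor;
        this.maximumRedeliveries = maximumRedeliveries;
    }

    // One initial attempt plus up to maximumRedeliveries retries; if all of them fail,
    // the message is moved to the dead letter list instead of being lost.
    boolean deliver(T message) {
        RuntimeException lastFailure = null;
        for (int attempt = 0; attempt <= maximumRedeliveries; attempt++) {
            try {
                processor.accept(message);
                return true;
            } catch (RuntimeException e) {
                lastFailure = e;
            }
        }
        deadLetters.add(message);
        causes.add(lastFailure);
        return false;
    }

    public static void main(String[] args) {
        int[] calls = {0};
        DeadLetterChannel<String> channel = new DeadLetterChannel<>(message -> {
            if (++calls[0] < 3) throw new IllegalStateException("temporary failure");
        }, 2);
        System.out.println(channel.deliver("product-1") + " after " + calls[0] + " calls"); // true after 3 calls
    }
}
```

Keeping the failure cause next to the dead letter is what makes later logging or reporting useful: the error route knows both what failed and why.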

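```java
// Declared in configure() before any route definitions.
// CustomProcessingException is marked as handled: processing of that message stops
// quietly and the message is NOT moved to the dead letter channel.
onException(CustomProcessingException.class).handled(true);

// Any other failure moves the message to the "direct:Error" dead letter endpoint.
errorHandler(deadLetterChannel("direct:Error"));

from("direct:Error").process(new ErrorHandlingProcessor());
```

Integrating with AEM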

Using Apache Camel in an AEM application is really easy. Configuration requires only a few simple steps.

The first step is to add camel-core to your project. In a Maven project, it looks like this:

```xml
<dependency>
    <groupId>org.apache.camel</groupId>
    <artifactId>camel-core</artifactId>
    <version>XXXX</version>
</dependency>
```

Then make sure that this library has been installed in AEM as an OSGi bundle by verifying its status in the Apache Felix system console (/system/console/bundles).

The next step is to create the Camel Context entry point and trigger processing:

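```java
import org.apache.camel.CamelContext;
import org.apache.camel.ProducerTemplate;
import org.apache.camel.impl.DefaultCamelContext;

// ... (LOGGER is assumed to be a logger declared in the enclosing class)

public void execute() {
    CamelContext camelContext = null;
    try {
        camelContext = new DefaultCamelContext();
        // no defensive copy of the original message is kept for every exchange
        camelContext.setAllowUseOriginalMessage(false);
        // register the routes (splitting, routing, aggregating, ...)
        camelContext.addRoutes(new CustomRouteBuilder());
        camelContext.start();

        // send the initial message; with direct: endpoints the call returns
        // once the synchronous part of the route has finished
        ProducerTemplate template = camelContext.createProducerTemplate();
        template.sendBody("direct:start", new CustomProcessingBody());
    } catch (Exception e) {
        LOGGER.error("Fatal error during camel routing setup", e);
    } finally {
        if (camelContext != null) {
            try {
                camelContext.stop();
            } catch (Exception e) {
                LOGGER.error("Can't stop camel context", e);
            }
        }
    }
}
```

And this is basically it. CustomRouteBuilder defines the sequence of steps to perform (e.g. splitting, aggregating, routing). The snippets presented earlier in the section about Integration Patterns are actually parts of this Route Builder.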

Summary

Apache Camel is a great tool for supporting complex operations on the AEM platform. Whenever an extra processing requirement appears, changing the whole mechanism is as simple as implementing a single step (a processor).

Camel can integrate with many services through its built-in components. In the case of AEM, it can be used very effectively together with AEM workflows to trigger and split complex operations, aggregate results and perform batch replication. It has good error handling options and provides failover mechanisms that let you retry operations when required. Last but not least, very little prior knowledge is needed to start using Apache Camel, because defining routes is intuitive and resembles writing natural-language sentences.

Useful Links

Hero image by Reiseuhu

Featured image by Reiseuhu

This post was originally published on: https://www.cognifide.com/our-blogs/technology/integrating-aem-with-apache-camel